OpenAI API Integration: Best Practices


The OpenAI API makes it easy for businesses to use advanced AI models like GPT for text, DALL-E for images, and Codex for code. This article outlines key steps for successful integration, focusing on:

- Security: Protect API keys by storing them as environment variables, rotating them regularly, and assigning unique keys to team members.

- Scalability: Use cloud solutions such as Azure with Kubernetes for handling large-scale operations. Implement load balancing and optimize infrastructure for growth.

- Cost Management: Monitor token usage, set budget alerts, and choose models (e.g., GPT-3.5 Turbo for cost savings) based on task requirements.

- Fault Tolerance: Apply techniques like retries with exponential backoff, circuit breakers, and dead letter queues to ensure reliability during failures.

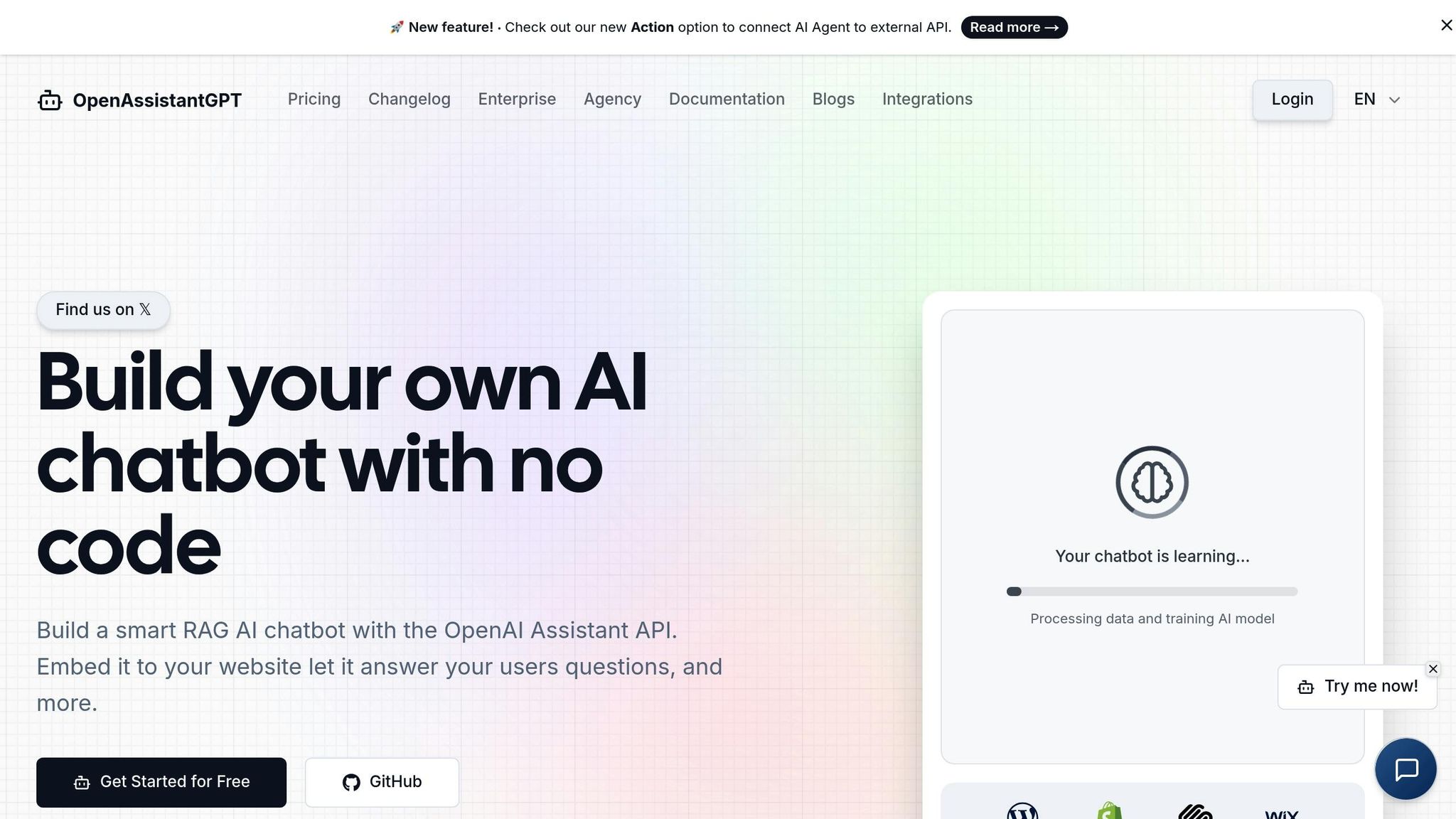

- No-Code Solutions: Platforms like OpenAssistantGPT simplify chatbot creation, offering features like web crawling, file analysis, and API querying.

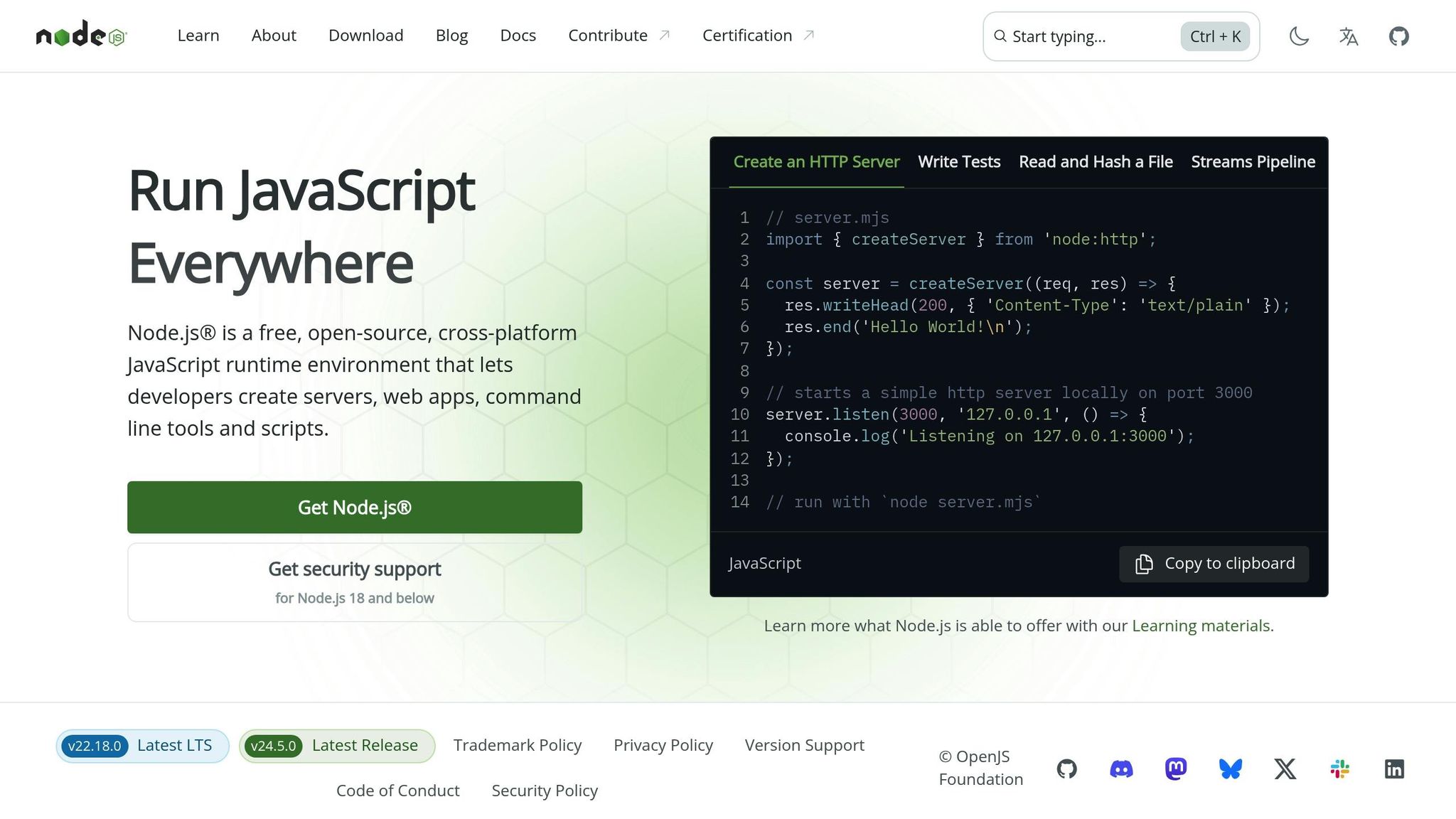


Core Principles of Secure and Scalable API Integration

Successfully integrating OpenAI's API requires a strong focus on secure key management, scalable infrastructure, and effective cost control. These principles not only protect your integration but also ensure that your data pipelines are reliable and efficient.

API Key Management

Your API key grants access to OpenAI's services, and keeping it secure is non-negotiable. As the OpenAI Help Center explains:

"An API key is a unique code that identifies your requests to the API. Your API key is intended to be used by you. The sharing of API keys is against the Terms of Use."

It's critical to avoid embedding API keys directly into your code. Instead, store them securely as environment variables on your server. For instance, in Python, you can retrieve your API key using os.getenv('OPENAI_API_KEY').
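As a minimal sketch of the environment-variable approach, the helper below reads the key and fails fast when it is missing. The function name `load_openai_api_key` is an illustrative assumption, not part of any OpenAI library.

```python
import os


def load_openai_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, failing fast if it is missing."""
    api_key = os.getenv(env_var)
    if not api_key:
        # Raising early avoids sending unauthenticated requests later on.
        raise RuntimeError(f"Environment variable {env_var} is not set")
    return api_key
```

Calling this once at startup surfaces a missing key immediately rather than on the first API request.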

Assign unique API keys to each team member rather than sharing a single key across the organization. This makes it easier to monitor usage patterns and revoke access when roles change or team members leave.

Regularly rotate your API keys and monitor logs for unusual activity. This allows you to quickly identify and disable compromised keys. Limit the permissions of your API keys to only what’s necessary for specific tasks, using restricted scopes to enforce these limitations.

Once your keys are secure, the next step is ensuring your infrastructure can handle API demands effectively.

Infrastructure and Scalability Considerations

OpenAI's operations highlight the scale required for advanced AI. By 2021, OpenAI expanded its Kubernetes infrastructure to 7,500 nodes and utilized tens of thousands of NVIDIA A100 GPUs for its supercomputer. This level of scaling underscores the importance of planning for growth.

Cloud-based solutions are ideal for handling scaling needs. OpenAI’s collaboration with Azure as its exclusive cloud provider enables businesses to deploy AI services efficiently. For U.S.-based deployments, Azure OpenAI services offer options like Provisioned Throughput Units (PTUs) for consistent performance, with Pay-as-you-go (PAYG) covering traffic beyond PTU limits.

To manage traffic flow, use ingress and egress gateways. Ingress gateways regulate external access to your AI APIs, while egress gateways manage how your internal systems consume external services.

Dynamic load balancing is another essential tool. Instead of relying solely on round-robin distribution, direct traffic to OpenAI backends based on priority to avoid throttling. Consolidating Azure workloads under a single subscription and maintaining one Azure OpenAI resource per region can streamline management.
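One way to picture priority-based routing is the sketch below. It prefers the lowest-priority-number backend and skips any backend that has recently signalled throttling. The `Backend` class, the backend names, and the `throttled_until` field are hypothetical; a production gateway would typically handle this in its own policy layer.

```python
import time
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    priority: int                 # lower number = preferred (e.g. PTU before PAYG)
    throttled_until: float = 0.0  # epoch seconds; 0 means available now


def pick_backend(backends, now=None):
    """Return the highest-priority backend that is not currently throttled."""
    now = time.time() if now is None else now
    available = [b for b in backends if b.throttled_until <= now]
    if not available:
        raise RuntimeError("All backends are currently throttled")
    return min(available, key=lambda b: b.priority)


def mark_throttled(backend, retry_after_seconds, now=None):
    """Record that a backend asked us to wait (e.g. after an HTTP 429)."""
    now = time.time() if now is None else now
    backend.throttled_until = now + retry_after_seconds
```

With a PTU deployment at priority 1 and a PAYG deployment at priority 2, traffic spills over to PAYG only while the PTU backend is marked as throttled.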

Start small with a Kubernetes cluster and expand as your needs grow. To maintain consistency and efficiency, adopt Infrastructure as Code (IaC) tools like Terraform. Optimize networking for large clusters, implement GPU health checks, and ensure robust management practices.

With infrastructure in place, managing costs becomes the next priority.

Cost Monitoring and Usage Optimization

OpenAI’s pricing model requires careful attention to avoid unexpected charges. A token, roughly equivalent to four characters, is the basis for pricing. For reference, 1,000 tokens are approximately 750 words.

Understanding the Pricing Models

Here’s a breakdown of OpenAI’s pricing for different models:

| Model | Input Cost (per 1M tokens) | Output Cost (per 1M tokens) |
|---|---|---|
| GPT-4o | $5.00 | $15.00 |
| GPT-4 Turbo | $10.00 | $30.00 |
| GPT-3.5 Turbo | $0.50 | $1.50 |
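To make the arithmetic concrete, here is a rough cost estimator built on the prices in the table and the four-characters-per-token rule of thumb. The model identifiers and function names are illustrative assumptions, and the prices may be outdated by the time you read this.

```python
# Prices in USD per 1M tokens, copied from the table above; they may be outdated.
PRICES_PER_MILLION = {
    "gpt-4o": (5.00, 15.00),
    "gpt-4-turbo": (10.00, 30.00),
    "gpt-3.5-turbo": (0.50, 1.50),
}


def estimate_tokens(text: str) -> int:
    """Rough estimate using the ~4 characters per token rule of thumb."""
    return max(1, len(text) // 4)


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request for the given token counts."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000
```

For a request with 2,000 input tokens and 500 output tokens, this gives about $0.00175 on GPT-3.5 Turbo and about $0.0175 on GPT-4o, a tenfold difference. For exact counts, use a real tokenizer rather than the character heuristic.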

Monitor and Optimize Usage

Set up budget alerts to track spending and review usage metrics like token consumption and request volume regularly. OpenAI offers a real-time dashboard for monitoring these details.

Batch API requests where possible. For example, submitting non-urgent requests through OpenAI’s Batch API, which processes them asynchronously within a 24-hour window, can reduce costs by up to 50%. Use caching to avoid redundant calls and implement rate limiting to prevent excessive usage. For tasks that don’t require cutting-edge capabilities, older models may be a cost-effective alternative.
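Caching can be sketched as a small in-memory lookup keyed by a hash of the model and prompt. `ResponseCache` and the `call_api` callback are hypothetical names; a real deployment would more likely use a shared store with expiry, and caching only makes sense for prompts where a repeated answer is acceptable.

```python
import hashlib
import json


class ResponseCache:
    """In-memory cache keyed by a hash of (model, prompt)."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model, prompt):
        payload = json.dumps({"model": model, "prompt": prompt}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, model, prompt, call_api):
        """Return a cached response, calling call_api(model, prompt) only on a miss."""
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = call_api(model, prompt)
        self._store[key] = result
        return result
```

The `hits` and `misses` counters give a quick way to check whether the cache is actually saving calls.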

Strategic Model Selection

Selecting the right model is key. GPT-4o offers a balance of speed and cost, making it suitable for many applications. For simpler tasks, GPT-3.5 Turbo provides even greater savings. Additionally, refine your prompt engineering to minimize token usage without sacrificing quality.

Token-Based Rate Limiting

Token-based rate limiting aligns with OpenAI’s pricing structure, offering better cost predictability compared to request-based limits. This approach ensures fair resource allocation across applications.
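A token-based limiter can be sketched as a token bucket whose unit is model tokens rather than requests. The class name `TokenBudgetLimiter` and the per-minute budget are assumptions for illustration; your actual limits depend on your account and model.

```python
import time


class TokenBudgetLimiter:
    """Token bucket measured in model tokens rather than requests."""

    def __init__(self, tokens_per_minute, clock=time.monotonic):
        self.capacity = float(tokens_per_minute)
        self.refill_per_second = tokens_per_minute / 60.0
        self._clock = clock
        self._available = self.capacity
        self._last = clock()

    def _refill(self):
        # Add back the budget earned since the last check, up to capacity.
        now = self._clock()
        elapsed = now - self._last
        self._available = min(self.capacity, self._available + elapsed * self.refill_per_second)
        self._last = now

    def try_acquire(self, tokens):
        """Reserve `tokens` from the budget; return False if not enough is left."""
        self._refill()
        if tokens <= self._available:
            self._available -= tokens
            return True
        return False
```

A caller would estimate the tokens a request will consume (prompt plus expected completion) and send the request only when `try_acquire` returns True, queueing or delaying it otherwise.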

As Ajeet Raina from Collabnix notes:

"The OpenAI API unlocks a world of possibilities, empowering developers to integrate powerful large language models into their applications. But with great power comes great responsibility, especially when it comes to securing your OpenAI API keys."

Building Fault-Tolerant Data Pipelines

Integrating OpenAI's API into your data pipelines can come with challenges like timeouts, rate limits, and service interruptions. To ensure smooth operations, it’s essential to design systems that can recover automatically from these hiccups.

As Benjamin Kennady, Cloud Solutions Architect at Striim, puts it:

"A data pipeline can be thought of as the flow of logic that results in an organization being able to answer a specific question or questions on that data."

The reliability of your pipeline directly affects your ability to extract actionable insights. Let’s explore how to build resilience into each component.

Error Handling Techniques

When dealing with temporary errors, exponential backoff is a go-to strategy. If you encounter a rate limit or timeout, retrying with progressively longer delays can help. For example, Python’s tenacity library simplifies this process:

@retry(wait=wait_exponential(multiplier=1, min=4, max=10))
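The decorator above comes from tenacity. For readers who want to see the mechanics, here is a dependency-free sketch that approximates the same schedule (delays double on each retry, clamped between 4 and 10 seconds). `TransientAPIError` is a hypothetical stand-in for whatever rate-limit or timeout exception your client raises, and the attempt limit of five is an assumption.

```python
import random
import time


class TransientAPIError(Exception):
    """Hypothetical stand-in for rate-limit or timeout errors."""


def backoff_delays(attempts, multiplier=1.0, min_delay=4.0, max_delay=10.0):
    """Delay before each retry: multiplier * 2**n, clamped to [min_delay, max_delay]."""
    return [min(max_delay, max(min_delay, multiplier * 2 ** n)) for n in range(attempts)]


def call_with_backoff(func, max_attempts=5, sleep=time.sleep, jitter=0.0):
    """Call func(), retrying on TransientAPIError with exponential backoff."""
    delays = backoff_delays(max_attempts - 1)
    for attempt in range(max_attempts):
        try:
            return func()
        except TransientAPIError:
            if attempt == max_attempts - 1:
                raise  # out of retries; let the caller decide what to do
            # Optional random jitter spreads out retries from many clients.
            sleep(delays[attempt] + random.uniform(0, jitter))
```

Capping the number of attempts matters as much as the delay schedule: without it, a persistent failure would retry forever.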

Circuit breakers are another powerful tool. They temporarily stop requests to a failing service after a set number of errors. This pause allows the service to recover while your pipeline continues processing other tasks.
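A minimal circuit breaker might look like the sketch below. The class names, the threshold of five failures, and the 60-second recovery window are illustrative assumptions to tune for your workload, and this version is not thread-safe.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is skipped."""


class CircuitBreaker:
    def __init__(self, failure_threshold=5, recovery_seconds=60.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.recovery_seconds = recovery_seconds
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.recovery_seconds:
                raise CircuitOpenError("circuit open; skipping call")
            # Recovery window elapsed: allow one trial call (half-open state).
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and resets the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

While the circuit is open, callers get an immediate `CircuitOpenError` and can fall back to a cached answer or queue the work instead of waiting on a failing service.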

For errors that persist despite retries, Dead Letter Queues (DLQs) are invaluable. These queues store unprocessable data for later review, ensuring nothing gets lost. As Donal Tobin from Integrate.io explains:

"Proper retry depth configuration prevents data loss while optimizing system resource usage during transformation failures."

"Retry depth should be balanced with timeout settings to ensure failed jobs don't block the entire pipeline indefinitely."

To further enhance reliability:

- Ensure idempotency, so reprocessing data doesn’t create duplicates or inconsistencies.

- Break long jobs into smaller chunks (such as 10-minute segments) to limit the impact of failures and speed up recovery.
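The dead letter queue, idempotency, and chunking ideas can be combined in one small sketch. All names here (`IdempotentSink`, `process_with_dlq`, `chunked`) are hypothetical, the stores are in-memory for brevity, and a real pipeline would persist both the seen request IDs and the dead letters.

```python
from collections import deque


def chunked(items, size):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


class IdempotentSink:
    """Writes each result at most once, keyed by a caller-supplied request ID."""

    def __init__(self):
        self.rows = []
        self._seen = set()

    def already_written(self, request_id):
        return request_id in self._seen

    def write(self, request_id, row):
        if request_id in self._seen:
            return False  # duplicate delivery (e.g. a retry); skip the write
        self._seen.add(request_id)
        self.rows.append(row)
        return True


def process_with_dlq(records, handler, sink, max_retries=3):
    """Process (request_id, payload) pairs; park persistent failures in a DLQ."""
    dead_letters = deque()
    for request_id, payload in records:
        if sink.already_written(request_id):
            continue  # reprocessing the same record is a no-op
        last_error = None
        for _ in range(max_retries):
            try:
                sink.write(request_id, handler(payload))
                break
            except Exception as exc:  # in real code, catch specific exceptions
                last_error = exc
        else:
            # All retries failed: keep the record and the reason for later review.
            dead_letters.append(
                {"request_id": request_id, "payload": payload, "error": repr(last_error)}
            )
    return dead_letters
```

Feeding the pipeline one chunk at a time means a crash loses at most one chunk of progress, and replaying that chunk is safe because the sink ignores request IDs it has already written.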

These techniques create a solid foundation for effective error handling, which directly supports better logging and monitoring.

Logging and Monitoring

Good logging practices turn debugging from a frustrating guessing game into a structured process. According to the 2022 DevOps Pulse survey, 64% of respondents reported taking over an hour to resolve production incidents. Centralized logging can significantly cut down this time.

Structured Logging makes logs easier to process and analyze. Franz Knupfer, Senior Manager at New Relic, highlights:

"Structured logs are designed to be much easier for machines to read, which also makes it much easier to automate processes and save time."

By using a consistent JSON format with fields like timestamp, log level, service name, and error details, you can automate log analysis and correlate issues across your entire system.
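A JSON log format along these lines can be sketched with Python's standard `logging` module. The class name `JsonFormatter` and the exact field names are assumptions; adapt them to whatever schema your log platform expects.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON object."""

    def __init__(self, service_name):
        super().__init__()
        self.service_name = service_name

    def format(self, record):
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "service": self.service_name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            # Include the traceback text when the log call captured an exception.
            entry["error"] = self.formatException(record.exc_info)
        return json.dumps(entry)
```

To use it, create a `logging.StreamHandler()`, call `setFormatter(JsonFormatter("your-service"))` on it, and attach the handler to your logger.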

Centralized Log Collection brings all your logs into one place, creating a single source of truth. Xcitium explains:

"Centralized logging brings all your system, application, and security logs into one place - giving your team a single source of truth to detect issues, analyze performance, and maintain compliance".

For example, in July 2025, TOM, a fintech company in Turkey, implemented a centralized logging system using Kafka and the ELK stack. They developed custom middleware with Python, containerized in Docker, to route logs to Kafka, email, Slack, or a database. This setup allowed their teams to focus on critical tasks without being overwhelmed by log management.

Smart Alerting can prevent alert fatigue while ensuring critical issues are addressed quickly. For instance, you could configure alerts to trigger only when API error rates exceed 5% over a 5-minute window. Notifications can be tailored to severity, with critical alerts sent via phone or SMS and lower-priority issues routed through Slack or email.
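The 5%-over-5-minutes rule can be expressed as a sliding-window check. This is a sketch under the assumption that each API call is recorded with a timestamp and an error flag; `ErrorRateAlert` is a hypothetical name, and most monitoring platforms offer an equivalent rule out of the box.

```python
from collections import deque


class ErrorRateAlert:
    """Fires when the error rate over a sliding time window exceeds a threshold."""

    def __init__(self, threshold=0.05, window_seconds=300):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._events = deque()  # (timestamp, is_error), oldest first

    def record(self, timestamp, is_error):
        self._events.append((timestamp, is_error))
        # Drop events that have fallen out of the window.
        cutoff = timestamp - self.window_seconds
        while self._events and self._events[0][0] <= cutoff:
            self._events.popleft()

    def should_alert(self):
        if not self._events:
            return False
        errors = sum(1 for _, is_error in self._events if is_error)
        return errors / len(self._events) > self.threshold
```

In practice you would also require a minimum number of requests in the window, so a single failed call during a quiet period does not page anyone.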

To manage costs, log sampling is an effective strategy. One e-commerce company reduced storage expenses by 70% by sampling just 10% of its logs while retaining all error and warning messages.
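A sampling rule like this one, which keeps every warning and error but only a fraction of everything else, fits in a few lines. The function name and the injectable `rng` parameter are illustrative assumptions.

```python
import random


def should_keep(level, sample_rate=0.10, rng=random.random):
    """Keep every WARNING/ERROR/CRITICAL entry; sample the rest at sample_rate."""
    if level.upper() in {"WARNING", "ERROR", "CRITICAL"}:
        return True
    return rng() < sample_rate
```

Random sampling is simple but can split a single request's log lines. Sampling on a hash of the request ID instead keeps or drops all lines for a request together.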

Monitoring key metrics like log volume trends, processing latency, and storage usage ensures your logging system stays healthy. Sagar Navroop, a Cloud Architect, advises:

"Effective log management requires monitoring key metrics to ensure system health and performance."

Setting alerts for unexpected spikes in log volume can help you catch and resolve issues early.

Comparison of Fault Tolerance Techniques

Each fault tolerance method offers unique benefits, so selecting the right approach depends on your specific needs.

| Technique | Best Use Case | Pros | Cons | Implementation Notes |
|---|---|---|---|---|
| Exponential Backoff | Rate limiting and transient network issues | Reduces server load, enables auto-recovery | May delay processing; requires timeouts | Start with a 4-second delay, cap at 10 seconds |
| Circuit Breaker | Persistent API failures and outages | Prevents cascading failures | Requires careful threshold tuning | Monitor failures over 1–2 minute windows |
| Dead Letter Queue | Unprocessable messages or data corruption | Preserves data for manual review | Needs a separate workflow | Set retention based on your SLA |
| Idempotency | Retry scenarios, duplicate prevention | Ensures consistent results | Adds development complexity | Use unique request IDs to avoid duplicates |

The growing importance of monitoring systems is reflected in the data logger market, which is projected to expand at a 7.60% CAGR through 2029. As your OpenAI API integration scales, adopting these techniques will help maintain reliability and earn user trust.

Tailor your approach based on your system’s needs. For example, financial applications might prioritize data consistency through idempotency, while content generation systems could focus on cost-efficiency with smart retry logic.


Using No-Code Platforms for OpenAI Chatbot Integration

Creating OpenAI-powered chatbots no longer has to be a daunting task. Thanks to no-code platforms, businesses can now bridge the gap between advanced AI technology and practical applications. These tools empower teams without programming expertise to design and deploy sophisticated chatbots with ease.

No-code solutions address a common challenge for many organizations: limited technical resources. According to industry research, these platforms allow for quick integration without the need for coding, enabling small businesses to embrace AI technology effectively. This shift opens the door for domain experts to build tailored AI solutions themselves.

Another major advantage? No-code platforms drastically reduce deployment time, from weeks to just hours. This efficiency paves the way for exploring the capabilities of tools like OpenAssistantGPT.

Key Features of OpenAssistantGPT

OpenAssistantGPT showcases how no-code platforms can simplify the use of OpenAI's Assistant API. This platform goes beyond basic chatbot functionality, offering advanced features that enhance usability and performance.

- Web Crawling: Automatically extract and process content from websites, ensuring that resources like product pages or support documentation stay up-to-date without requiring manual input.

- File Analysis: Handle various document formats, including CSV files, XML documents, and even images. This makes it easy to reference technical documents, product details, or visual content in real time.

- API Querying: Leverage AI Agent Actions to connect chatbots with external systems and databases. This allows for live data retrieval from CRMs, inventory systems, or other third-party tools, providing accurate and timely responses.

- Lead Collection: Engage visitors in natural conversations to capture contact information when they show interest in products or services. This feature seamlessly integrates new leads into sales and marketing workflows.

OpenAssistantGPT supports models like GPT-4, GPT-3.5, and GPT-4o, offering flexibility in balancing cost and capability. While Chat Completions are ideal for quick, one-off responses, Assistants excel in handling complex, ongoing interactions.

Security and Compliance for Chatbots

Security remains a top concern for chatbot adoption, with 73% of consumers expressing worries about data privacy when interacting with chatbots. These concerns are valid: improper data handling can lead to severe penalties, such as fines under GDPR that can reach €20 million or 4% of global annual revenue, whichever is higher.

OpenAssistantGPT prioritizes security with enterprise-grade features. The platform supports SAML/SSO authentication, enabling organizations to integrate chatbot access with existing identity management systems. These measures complement secure API key management and fault-tolerant data pipelines.

OpenAI's infrastructure adds another layer of protection. OpenAI ensures businesses retain ownership and control over their data while meeting compliance requirements. Key security features include AES-256 encryption for data at rest, TLS 1.2+ for data in transit, and SOC 2 audits to uphold industry standards. For organizations under strict regulations, OpenAI offers Data Processing Addendums (DPA) to meet GDPR compliance.

Experts emphasize the importance of strong security practices:

"Implement strong data processing agreements with all vendors. This isn't optional – we've seen organizations face penalties because they assumed their cloud provider handled compliance."

- Randy Bryan, Owner of tekRESCUE

"To ensure your chatbot operates ethically and legally, focus on data minimization, implement strong encryption, and provide clear opt-in mechanisms for data collection and use."

- Steve Mills, Chief AI Ethics Officer, Boston Consulting Group

"Create transparent user interfaces that clearly communicate data practices to users. Both GDPR and CCPA emphasize consent and disclosure – your chatbot should inform users about data collection and provide clear opt-out mechanisms."

- Chongwei Chen, President & CEO of DataNumen

Customizing and Embedding Chatbots

Once security and scalability are in place, customization takes center stage in creating engaging chatbot experiences. A well-designed chatbot goes beyond basic functionality, aligning with your brand and meeting user needs.

- Brand Alignment: Tailor the chatbot's tone and language to reflect your brand's personality. For instance, a financial services chatbot might use a formal tone, while an e-commerce chatbot could opt for a friendly, conversational style. Consistency in tone reinforces your brand identity across customer interactions.

- Training with Business-Specific Data: Incorporate resources like product documentation, FAQs, and company policies to train your chatbot for accurate, relevant responses. Regular updates based on user feedback ensure the chatbot evolves alongside your business.

- Strategic Placement: Position chatbots strategically to maximize engagement. For example, place them on your homepage for general inquiries, product pages for specific questions, support pages for troubleshooting, and checkout pages to help reduce cart abandonment.

- Integration Options and Performance Monitoring: OpenAssistantGPT offers flexible embedding options, from direct code integration for custom websites to plugins for platforms like WordPress. Monitoring user behavior - such as activity levels, bounce rates, and conversions - provides insights to refine chatbot interactions. Features like automatic triggers based on visitor location or device type further enhance user experiences.

An iterative approach is key to success. Continuously refining conversation flows, addressing edge cases, and testing integration points ensures a smooth and reliable user experience. Thoughtful customization and strategic deployment can turn chatbots into powerful tools for engagement and efficiency.

Conclusion and Key Takeaways

Achieving successful API integration requires a careful balance of security, scalability, fault tolerance, and cost management. This guide has walked through these critical elements, providing strategies that act as a roadmap for organizations aiming to integrate AI capabilities while ensuring their systems remain reliable and robust.

Best Practices Summary

Security is the cornerstone of any enterprise-level API integration. As Rick Hightower wisely notes:

"security isn't a feature to bolt on later; it's a fundamental design principle that should guide every architectural decision."

This involves practices like assigning unique API keys to each team member, storing credentials securely in environment variables, and avoiding the deployment of keys in client-side environments. These measures protect sensitive data and prevent unauthorized access.

Scalability ensures smooth operations even as demand grows. Techniques such as token caching (e.g., using Redis) help reduce authentication overhead, while client-side rate limiting works alongside server controls to maintain performance. Smart load balancing, with features like configurable failure thresholds and priority-based routing, ensures workloads are distributed efficiently across multiple OpenAI instances.

Fault tolerance keeps systems resilient during unexpected issues. Strategies like automatic token refresh, backoff mechanisms for rate limits, and circuit breaker patterns help maintain system availability. Adding redundant deployments further strengthens reliability, especially during peak usage.

Cost management becomes critical as API usage scales. Methods like prompt compression with LLMLingua and generation limits set through the max_tokens parameter can help control expenses, while streaming responses reduces perceived latency. For stable workloads, Provisioned Throughput Units (PTUs) may offer better cost efficiency compared to pay-as-you-go pricing.

Next Steps for Implementation

To put these best practices into action, start by prioritizing security. Review your API key management processes, as a compromised key can disrupt operations. Implement regular key rotation procedures and ensure keys are stored securely.

If you're looking for rapid deployment, consider platforms like OpenAssistantGPT. It offers secure and scalable chatbot integration with features such as SAML/SSO authentication, web crawling, and file analysis, all while adhering to the security principles discussed in this guide.

For building more complex workflows, explore orchestration tools like Temporal, Airflow, or AWS Step Functions. These frameworks provide built-in fault tolerance for managing intricate data pipelines, though it’s crucial to weigh their complexity against long-term maintainability.

Finally, adopt a data-driven approach by continuously monitoring usage patterns, response times, and failure rates. This helps identify areas for improvement and ensures your integration remains aligned with evolving business needs.

FAQs

What are the best practices for keeping my OpenAI API keys secure during integration?

To protect your OpenAI API keys, it's best to store them in environment variables rather than embedding them directly in your application's code. Hardcoding keys, especially in client-side code or public repositories, increases the risk of exposing them to unauthorized access. Instead, rely on a server-side application to manage API calls, keeping your keys secure and out of reach.

You can further enhance security by restricting API key usage. Set permissions to control access and limit usage to specific IP addresses or applications. Make it a habit to rotate your keys regularly and keep an eye on their activity to spot any unusual behavior. By following these practices, you can ensure your API keys remain safe and your integration stays secure.

How can I manage costs effectively when using OpenAI's API?

To keep your costs in check when using OpenAI's API, start by focusing on how you use tokens. Crafting well-thought-out prompts and selecting models that align with your specific needs - especially opting for lower-cost models when they fit - can make a big difference in reducing token usage.

You can also save money by routing non-urgent requests through the Batch API and caching responses to avoid making repeated API calls unnecessarily. On top of that, setting up automated tools to monitor costs and enforce rate limits can help you avoid unexpected charges. These steps make it easier to build a scalable, budget-friendly solution tailored to your requirements.

How do no-code platforms like OpenAssistantGPT make it easier to integrate AI chatbots?

No-code platforms such as OpenAssistantGPT make it easier than ever to integrate AI chatbots. With their straightforward, user-friendly tools, these platforms remove the need for any coding knowledge. They often include pre-built connectors and automation features, making it simple to link with APIs like OpenAI's and customize them quickly.

By simplifying the process, these platforms help cut down development time, reduce the risk of errors, and allow users to focus on building engaging and functional chatbots instead of wrestling with complicated technical setups. This makes them a smart choice for businesses aiming to roll out AI-powered chatbots with speed and efficiency.