How to Build an OpenAI Agent Using the Responses API

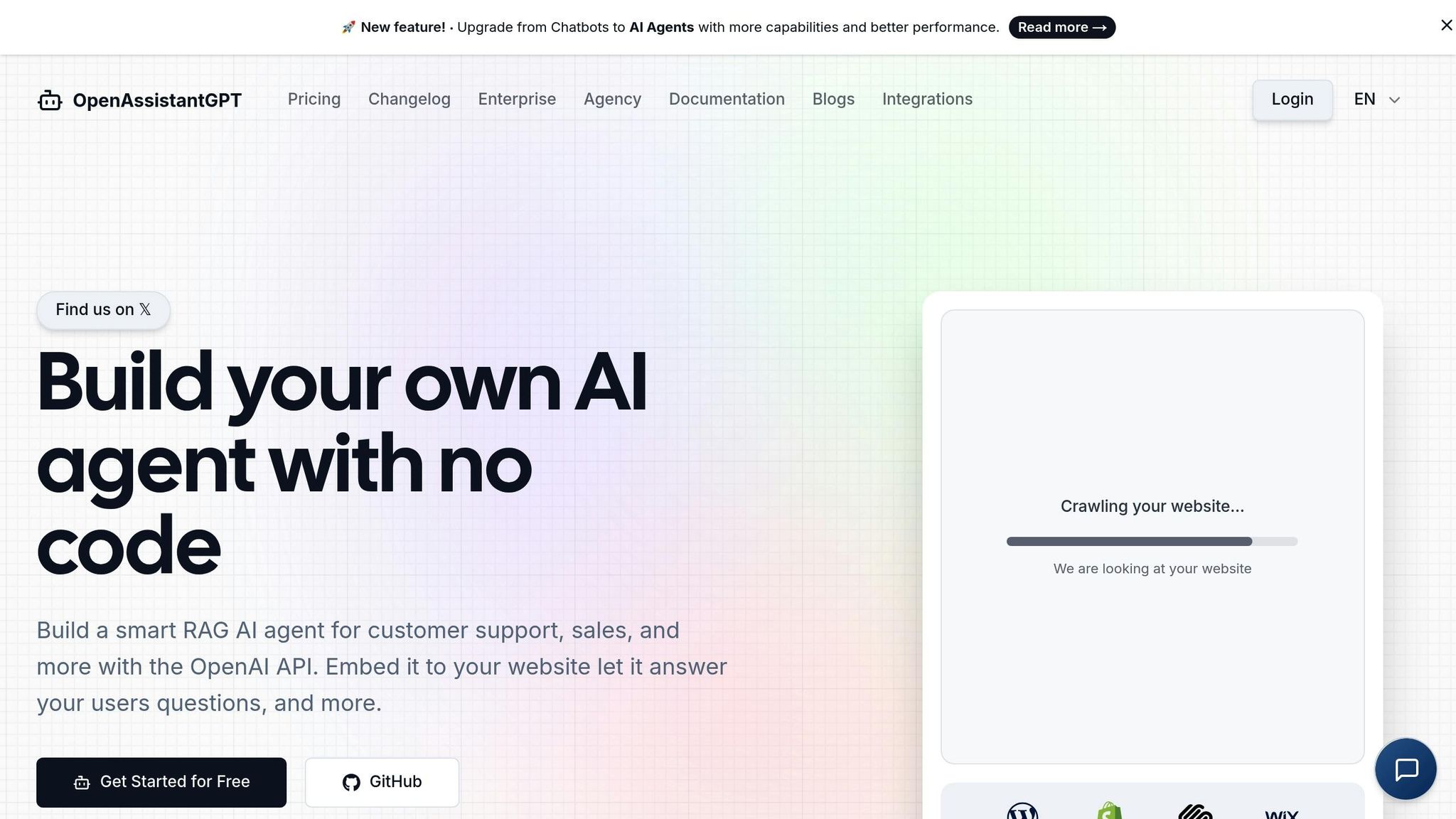

Building an OpenAI agent with the Assistants API is straightforward and doesn’t require coding skills. This guide explains how to create a chatbot with OpenAssistantGPT, a no-code platform, so that it gives accurate answers based on your custom content. Here’s a quick summary:

- Set Up Accounts: Create an OpenAI account with billing enabled, plus a Google or GitHub account to sign in to OpenAssistantGPT. Then generate an OpenAI API key to link the two platforms.

- Upload Content: Use .docx, .txt, or .csv files to build your chatbot’s knowledge base. Organize files by topic for better accuracy.

- Configure the Chatbot: Define its name and behavior, and connect it to your uploaded content. Choose between GPT-3.5 (budget-friendly) and GPT-4 (better for complex queries).

- Optimize Performance: Adjust token limits and temperature settings, and organize your files to improve response speed and accuracy.

- Integrate on Your Website: Embed the chatbot using a simple code snippet to make it accessible to users.

Step 1: Set Up Accounts and Configure API Keys

To kick off your journey with an OpenAI-powered agent, you'll need to set up two accounts and configure API keys properly. This step ensures your chatbot can tap into OpenAI's language models via OpenAssistantGPT.

Accounts You’ll Need

Before you can start building your AI agent, you’ll need two accounts:

- Google or GitHub Account: This is required to access OpenAssistantGPT's platform, a no-code tool for building your chatbot.

- OpenAI Account: Head over to platform.openai.com to create an account. This is essential because it provides access to the Assistants API, which powers your chatbot. Make sure to set up a valid payment method on this account: OpenAI won’t process message requests without billing in place.

Once these accounts are ready, the next step is to generate an API key to connect OpenAssistantGPT with OpenAI.

Creating and Managing Your OpenAI API Key

To create your API key, open platform.openai.com/api-keys in a new browser tab while keeping your OpenAssistantGPT dashboard open in another.

- Click on "Create new secret key" in the OpenAI dashboard.

- Give the key a clear name, like "OpenAssistantGPT Chatbot", to keep things organized.

- Copy the key as soon as it’s generated - it starts with "sk-" followed by a long string of characters. Remember, this key will only be displayed once.

Next, go to your OpenAssistantGPT dashboard at openassistantgpt.io/dashboard/settings. Paste the API key into the designated field and save your settings. This step connects OpenAssistantGPT to OpenAI’s servers, enabling your chatbot to function.

Important: Keep your API key secure. If someone else gets access to it, they could misuse it, leading to unwanted charges. If you think your key has been compromised, revoke it immediately in your OpenAI dashboard and create a new one.
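If you later call OpenAI from your own code rather than through OpenAssistantGPT, the usual way to keep the key out of your source files is an environment variable. This sketch assumes the variable is named `OPENAI_API_KEY` (the name OpenAI’s official SDKs read by default); `load_api_key` is a hypothetical helper, and its `sk-` check only catches obvious paste errors. It cannot tell whether a key is valid or revoked.

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Read the OpenAI API key from the environment instead of hard-coding it."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set; export it before starting your app.")
    # Dashboard-generated keys start with "sk-"; this is a format check only.
    if not key.startswith("sk-"):
        raise ValueError(f"{var_name} does not look like an OpenAI secret key.")
    return key
```

Set the variable in your shell or in your hosting provider’s settings, and keep it out of version control.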

Step 2: Upload Custom Content for Your Chatbot

Now that your accounts and API keys are ready, it's time to build your chatbot's knowledge base by uploading content. These files will serve as the foundation for your chatbot to deliver accurate and relevant answers to customer questions. Here's how to upload your content and what file types work best.

Supported File Types and Tips for Success

Your chatbot works best with .docx, .txt, or .csv files. To ensure smooth performance:

- Only upload public, non-sensitive information.

- Use high-quality, well-organized content to improve your chatbot's responses.

- If you're starting from scratch, consider pulling content from your website, product guides, or customer service scripts.

- Group similar information into separate files (e.g., by product or service) to make responses more precise.
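If you assemble the knowledge base with a script, a quick pre-upload check can enforce the file types listed above and group files by topic. This is a sketch under one hypothetical convention: each file sits in a folder named after its topic (for example `billing/refunds.txt`). `group_uploads` is illustrative and not part of OpenAssistantGPT.

```python
from collections import defaultdict
from pathlib import Path

# File types this guide recommends for the knowledge base.
SUPPORTED_EXTENSIONS = {".docx", ".txt", ".csv"}


def group_uploads(paths: list[str]) -> tuple[dict[str, list[str]], list[str]]:
    """Split candidate files into topic groups plus a list of unsupported files.

    The topic is the parent folder name (hypothetical convention);
    files without a folder go into a "general" group.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    rejected: list[str] = []
    for raw in paths:
        path = Path(raw)
        if path.suffix.lower() not in SUPPORTED_EXTENSIONS:
            rejected.append(raw)  # e.g. images or PDFs: not on the recommended list
            continue
        topic = path.parent.name or "general"
        groups[topic].append(path.name)
    return dict(groups), rejected
```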

Once your content is structured and ready, you're set to upload.

How to Upload Files in OpenAssistantGPT

Head over to openassistantgpt.io/dashboard/files in your browser. You can either drag and drop your files or click to select them manually.

After uploading, OpenAssistantGPT processes the content to make it searchable for your chatbot. This process usually takes a few moments, and you'll get a confirmation once it's done. The uploaded file will then appear in your list of files.

Uploading Multiple Files: If you have several files, you can upload them one at a time or in batches. Each file is indexed separately, and you can update or replace them as needed.

Keep in mind that file limits depend on your subscription plan:

- Free Plan: Up to 3 files

- Basic Plan: Up to 27 files

- Pro Plan: Up to 81 files
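When you upload in batches, a small check against your plan’s limit helps you avoid a batch that fails partway through. The limits below are copied from the list above and may change, so treat them as placeholders. `remaining_file_slots` and `can_upload` are hypothetical helpers, not an OpenAssistantGPT API.

```python
# Per-plan file limits as listed in this guide; check your plan page for current values.
PLAN_FILE_LIMITS = {"free": 3, "basic": 27, "pro": 81}


def remaining_file_slots(plan: str, files_already_uploaded: int) -> int:
    """Return how many more files the plan allows (never negative)."""
    limit = PLAN_FILE_LIMITS[plan.lower()]
    return max(limit - files_already_uploaded, 0)


def can_upload(plan: str, files_already_uploaded: int, new_files: int) -> bool:
    """True if adding `new_files` keeps you within the plan's file limit."""
    return new_files <= remaining_file_slots(plan, files_already_uploaded)
```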

Once your files are uploaded and processed, they form the knowledge base for your chatbot. In the next step, you'll connect this content to your AI assistant and bring it to life.

Step 3: Create and Configure Your Chatbot

Now that your files are ready, it’s time to set up your chatbot’s personality, connect it to your content, and select the appropriate OpenAI model.

Begin Chatbot Creation

Head over to openassistantgpt.io/dashboard/new/chatbot to access the chatbot creation form. This form is straightforward and only requires a few basic inputs to get started.

Configure Chatbot Settings

Define your chatbot’s identity and behavior by configuring these key settings:

- Display Name and Welcome Message: Choose a clear and friendly display name (e.g., "Customer Support Bot") and craft a welcoming message, such as: "Hi there! I’m here to assist you. What can I help you with today?"

- Default Prompt: This is a behind-the-scenes instruction that guides how the OpenAI model responds. A well-thought-out prompt can help steer conversations, maintain context, handle complex queries, and add a touch of personalization. For instance, you might instruct your chatbot to: "Always reply in a professional yet friendly tone, and ask follow-up questions if the user’s input needs clarification."

- File for Retrieval: Link your knowledge base or content files to the chatbot. This allows it to search through your custom data and provide accurate, content-driven responses.
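For readers who want to reproduce this setup directly against OpenAI’s Responses API instead of the OpenAssistantGPT dashboard, the default prompt maps roughly to the `instructions` parameter, and the retrieval files map to a `file_search` tool that points at a vector store holding your uploads. This is a sketch under assumptions: `vs_example123` is a placeholder vector store ID, `gpt-4o-mini` is only an example model (check which models your account can use with file search), and `build_request` is a hypothetical helper. The live call runs only when `OPENAI_API_KEY` is set and requires a recent version of the `openai` Python package.

```python
import os

# The example default prompt from this guide.
DEFAULT_PROMPT = (
    "Always reply in a professional yet friendly tone, and ask follow-up "
    "questions if the user's input needs clarification."
)


def build_request(question: str, vector_store_id: str, model: str = "gpt-4o-mini") -> dict:
    """Assemble keyword arguments for client.responses.create()."""
    return {
        "model": model,
        "instructions": DEFAULT_PROMPT,  # behind-the-scenes default prompt
        "input": question,  # the visitor's message
        # file_search lets the model retrieve passages from the files in the vector store.
        "tools": [{"type": "file_search", "vector_store_ids": [vector_store_id]}],
    }


if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    from openai import OpenAI  # pip install openai

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.responses.create(**build_request("What is your refund policy?", "vs_example123"))
    print(response.output_text)
```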

Once the settings are configured, you’ll need to select the OpenAI model that powers your chatbot.

Choose the Right OpenAI Model

The model you choose will influence your chatbot’s performance, the quality of its responses, and your overall costs. OpenAssistantGPT supports multiple OpenAI models, with GPT-3.5 and GPT-4 being the most commonly used:

- GPT-3.5: A budget-friendly option that’s great for handling simple question-and-answer interactions. It’s perfect for businesses with straightforward needs or limited budgets.

- GPT-4: Ideal for more complex conversations, GPT-4 offers improved performance. It’s particularly adept at managing ambiguous queries, maintaining context during longer chats, and delivering detailed responses. While it’s more expensive per interaction, it’s worth considering for advanced use cases.

You can also tweak the temperature setting to adjust the creativity of responses. A lower temperature makes answers more focused and consistent, which suits factual support questions, while a higher setting allows for more varied and creative replies.
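If you manage these choices in your own code, a small preset table keeps the model and temperature explicit. The preset names and values below are illustrative assumptions, not OpenAssistantGPT settings; the only hard rule enforced is that OpenAI’s API accepts temperatures from 0 to 2.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPreset:
    model: str
    temperature: float

    def __post_init__(self) -> None:
        # OpenAI's API accepts sampling temperatures between 0 and 2.
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError("temperature must be between 0 and 2")


# Hypothetical presets: a cheaper model for simple Q&A, a stronger one for complex chats.
PRESETS = {
    "simple_qa": ModelPreset(model="gpt-3.5-turbo", temperature=0.2),
    "complex": ModelPreset(model="gpt-4", temperature=0.5),
}


def pick_preset(needs_complex_reasoning: bool) -> ModelPreset:
    """Use the budget preset unless the use case needs deeper reasoning."""
    return PRESETS["complex" if needs_complex_reasoning else "simple_qa"]
```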

When you’re satisfied with the configurations, click Create to launch your chatbot. You’ll find your new chatbot on the dashboard at openassistantgpt.io/dashboard/chatbots, where you can test it using the chat option in the menu.

Once your chatbot is live, the next step is to fine-tune its performance to ensure it meets your needs.

Step 4: Optimize Chatbot Performance

Once your chatbot is up and running, the next step is fine-tuning its performance. By adjusting API settings and applying advanced techniques, you can reduce response delays, improve accuracy, and create a smoother experience for your users. Quick, precise answers keep customers engaged and satisfied.

Reduce Response Time with API Settings

Response speed is largely influenced by the model you use and the number of tokens generated per interaction. Here’s how you can tweak settings to make your chatbot faster:

Adjust Token Limits:

- Keep responses concise by setting a maximum token limit between 150 and 300 for most customer service interactions.

- Ensure your prompt stays under 500 tokens to minimize processing load.

- Review your default prompt and remove any unnecessary instructions or examples.
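To check a default prompt against the 500-token budget without calling the API, a rough estimate is usually enough. This sketch relies on OpenAI’s rule of thumb that one token is about four characters of English text; that ratio is an approximation (exact counts need a tokenizer such as the `tiktoken` package), and the helper names are hypothetical.

```python
import math

PROMPT_TOKEN_BUDGET = 500
OUTPUT_TOKEN_RANGE = (150, 300)  # suggested max-output range for customer service


def estimate_tokens(text: str) -> int:
    """Approximate token count at ~4 characters per token (English text)."""
    return math.ceil(len(text) / 4)


def check_prompt_budget(prompt: str, budget: int = PROMPT_TOKEN_BUDGET) -> tuple[bool, int]:
    """Return (fits_budget, estimated_tokens) for a default prompt."""
    tokens = estimate_tokens(prompt)
    return tokens <= budget, tokens


def clamp_max_output_tokens(requested: int) -> int:
    """Keep the response-length limit inside the suggested 150-300 range."""
    low, high = OUTPUT_TOKEN_RANGE
    return min(max(requested, low), high)
```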

Optimize Model Selection:

- If advanced reasoning isn’t critical, switch to GPT-3.5 (the gpt-3.5-turbo model in the API), which balances speed and quality and responds faster than GPT-4.

- Keep in mind that the model you chose in Step 3 directly impacts response time - faster models may trade a bit of accuracy for speed.

Fine-tune Temperature Settings:

- Lower the temperature to 0.3 or below for more consistent, predictable responses.

- Temperature has little direct effect on speed: response time depends mainly on the model and on how many tokens are generated, so pair a low temperature with the token limits above.

These adjustments to API settings can significantly cut down on response lag, but there’s more you can do to boost performance.
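Before and after changing these settings, it helps to measure response time rather than guess. A minimal timing wrapper, assuming `ask` is whatever function you use to send a question to your chatbot (hypothetical; the demo uses a stand-in that just sleeps):

```python
import statistics
import time
from typing import Callable


def measure_latency(ask: Callable[[str], str], questions: list[str]) -> dict[str, float]:
    """Send each question through `ask` and summarize wall-clock latency in seconds."""
    if not questions:
        raise ValueError("provide at least one sample question")
    timings = []
    for question in questions:
        start = time.perf_counter()
        ask(question)
        timings.append(time.perf_counter() - start)
    return {"count": len(timings), "mean_s": statistics.mean(timings), "max_s": max(timings)}


if __name__ == "__main__":
    def fake_ask(question: str) -> str:
        time.sleep(0.01)  # stand-in for a real chatbot/API call
        return "ok"

    print(measure_latency(fake_ask, ["Where is my order?", "Do you ship abroad?"]))
```

Run the same question list before and after a change so the numbers are comparable.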

Apply Advanced Performance Techniques

To further enhance your chatbot’s efficiency, consider these strategies:

Cache Frequent Queries:

- Use chatbot analytics to identify the most common customer questions.

- Create pre-written responses for the top 10–15 frequently asked questions.

- Update your knowledge base with these optimized answers to streamline responses.
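If you call the API yourself, the same caching idea can live in code: answer frequent questions from a lookup table of pre-written answers and only fall back to the model for new ones. This is a sketch; the normalization rule (lower-casing, dropping punctuation, collapsing spaces) is an assumption, and `ask_model` stands in for whatever function actually queries your chatbot. Model answers are kept in memory only, so they reset when the process restarts.

```python
import re
from typing import Callable


def normalize(question: str) -> str:
    """Lower-case, drop punctuation, and collapse whitespace so near-duplicates match."""
    cleaned = re.sub(r"[^\w\s]", "", question.lower())
    return " ".join(cleaned.split())


class FAQCache:
    def __init__(self, ask_model: Callable[[str], str], prewritten: dict[str, str]):
        self._ask_model = ask_model
        # Pre-written answers for the most frequent questions, keyed by normalized text.
        self._answers = {normalize(q): a for q, a in prewritten.items()}

    def answer(self, question: str) -> str:
        key = normalize(question)
        if key not in self._answers:
            # Cache miss: ask the model once and remember its answer.
            self._answers[key] = self._ask_model(question)
        return self._answers[key]
```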

Organize File Structure:

- Break large, bulky files into smaller, topic-specific documents.

- Use clear headings and bullet points to make information easier to retrieve.

- Eliminate redundant or unnecessary details that could slow down processing.
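If you prepare content with a script, a large plain-text document can be split into topic-specific files at its headings. The sketch assumes headings are lines starting with `# ` (a Markdown-style convention you may need to adapt) and returns file names and contents instead of writing to disk; `split_by_heading` is hypothetical, and duplicate headings would overwrite each other.

```python
import re


def slugify(title: str) -> str:
    """Turn a heading into a file-name-safe slug, e.g. 'Refund Policy' -> 'refund-policy'."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-") or "section"


def split_by_heading(text: str) -> dict[str, str]:
    """Split text at lines starting with '# ' and map '<slug>.txt' to each section's body."""
    sections: dict[str, str] = {}
    title, body = "introduction", []

    def flush() -> None:
        content = "\n".join(body).strip()
        if content:  # skip empty sections
            sections[f"{slugify(title)}.txt"] = content

    for line in text.splitlines():
        if line.startswith("# "):
            flush()
            title, body = line[2:].strip(), []
        else:
            body.append(line)
    flush()
    return sections
```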

Pre-warm Your Chatbot:

- Test your chatbot with sample questions after making configuration changes.

- Send a few test queries during off-peak hours to ensure everything runs smoothly.

- Regularly update content files to maintain both speed and accuracy.
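Post-change testing can be turned into a small repeatable check: send sample questions and confirm each answer mentions a keyword you expect. The questions, keywords, and `ask` function below are placeholders for your own content and integration.

```python
from typing import Callable


def run_smoke_tests(ask: Callable[[str], str], cases: dict[str, str]) -> list[str]:
    """Return the questions whose answers do not contain the expected keyword."""
    failures = []
    for question, keyword in cases.items():
        answer = ask(question)
        if keyword.lower() not in answer.lower():
            failures.append(question)
    return failures


if __name__ == "__main__":
    sample_cases = {
        "How long do refunds take?": "30 days",
        "Do you ship internationally?": "worldwide",
    }
    # Stand-in for a real chatbot call.
    canned = {"How long do refunds take?": "Refunds are processed within 30 days."}
    failed = run_smoke_tests(lambda q: canned.get(q, "I'm not sure."), sample_cases)
    print("All passed" if not failed else f"Check these questions: {failed}")
```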

Step 5: Add Your Chatbot to Your Website

Now that your chatbot is fine-tuned and ready, it’s time to make it accessible to your website visitors. This step transitions your chatbot from a testing phase to a fully operational tool that can assist users around the clock.

Get the Embed Code

The first thing you’ll need is your chatbot’s embed code, which you can find on the OpenAssistantGPT dashboard. This code, usually an iframe, includes parameters that let you customize how the chatbot looks and behaves.

To locate it, log in to your OpenAssistantGPT dashboard, find your chatbot in the list, and navigate to the "Deployment" section in its settings. There, you’ll see your unique embed code, complete with options for customization. For example, you can configure client-side prompts directly in the iframe URL:

```html
<iframe src="https://www.openassistantgpt.io/embed/1234123/window?chatbox=false&clientSidePrompt=You are currently talking to {User X} help him to understand the book {Book Name X}." ...></iframe>
```
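Because the client-side prompt travels inside the URL, its spaces and braces should be percent-encoded. The sketch below builds the `src` value with Python’s standard `urllib.parse`. The chatbot ID and the `chatbox` and `clientSidePrompt` parameters come from the example above; `build_embed_src` and the sample names in the demo are hypothetical.

```python
import html
from urllib.parse import quote, urlencode


def build_embed_src(chatbot_id: str, client_side_prompt: str, chatbox: bool = False) -> str:
    """Build the embed URL with every query value percent-encoded."""
    base = f"https://www.openassistantgpt.io/embed/{quote(chatbot_id, safe='')}/window"
    query = urlencode(
        {"chatbox": str(chatbox).lower(), "clientSidePrompt": client_side_prompt},
        quote_via=quote,  # encode spaces as %20 instead of '+'
    )
    return f"{base}?{query}"


if __name__ == "__main__":
    src = build_embed_src(
        "1234123",
        "You are currently talking to Alice. Help her understand the book Dune.",
    )
    # html.escape turns '&' into '&amp;' so the URL is safe inside an HTML attribute.
    print(f'<iframe src="{html.escape(src)}"></iframe>')
```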

Copy this code and make note of any parameters you want to adjust, such as the chatbot’s position on the page, its appearance, or the initial welcome message. Once you’ve finalized your customizations, you’re ready to add the chatbot to your site.

Embed the Chatbot on Your Website

With your embed code in hand, integrating the chatbot into your website is straightforward. Simply paste the code into your site’s HTML, placing it just before the closing </body> tag. This placement ensures the chatbot loads properly without interfering with other elements on the page.

If you’re using a website builder, look for its custom HTML feature to insert the code. Avoid wrapping the embed code in <div> tags with custom CSS, as this can disrupt the chatbot’s display and responsiveness.
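If your pages are generated from templates, the placement rule above (just before the closing body tag) can be applied programmatically. A minimal sketch using plain string handling; it assumes the page has one closing body tag, skips pages that already contain the snippet, and is not a substitute for your site builder’s custom-HTML feature. `inject_embed` is hypothetical.

```python
def inject_embed(page_html: str, embed_snippet: str) -> str:
    """Insert the embed snippet immediately before the last closing </body> tag."""
    if embed_snippet in page_html:
        return page_html  # already embedded; avoid adding a second chatbot
    index = page_html.lower().rfind("</body>")
    if index == -1:
        raise ValueError("no closing </body> tag found")
    return page_html[:index] + embed_snippet + "\n" + page_html[index:]
```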

After adding the code, save your changes and preview your website. You should see the chatbot button, usually located in the bottom-right corner unless you’ve customized its position. Click the button to test the chat window, ensuring it opens and functions as expected.

Final Testing

Before going live, test the chatbot thoroughly. Ask a few sample questions to confirm it retrieves information correctly from your uploaded files. Make sure the chatbot looks good and works seamlessly on both desktop and mobile devices - its responsive design should automatically adjust for smaller screens.

If you want the chatbot to appear on every page of your site, add the embed code to your site’s template or master page. This way, you won’t need to insert the code on individual pages.

Once everything checks out, your chatbot is ready to provide support to your visitors, no matter where they are or what device they’re using.

Conclusion: Your AI Chatbot is Ready

Your AI-powered chatbot, built with the Responses API, is now live on your website and ready to assist customers around the clock. It’s equipped to answer questions about your products, services, or policies with precision, drawing directly from your custom knowledge base.

To ensure your chatbot stays accurate and helpful, make it a habit to check your dashboard regularly. If you notice recurring questions that it struggles to answer, that’s your cue to expand or refine your knowledge base. Adding new files or updating existing ones as your business grows will help the chatbot keep up with evolving customer needs.
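If you can export conversation logs, a quick count shows which questions the chatbot keeps failing on. The sketch assumes a hypothetical log format of `(question, answered)` pairs and groups questions that differ only in case or spacing; adapt it to whatever your dashboard actually exports.

```python
from collections import Counter


def top_unanswered(log: list[tuple[str, bool]], limit: int = 10) -> list[tuple[str, int]]:
    """Return the most frequent questions the chatbot failed to answer."""
    counts = Counter(
        " ".join(question.lower().split())  # ignore case and extra spaces
        for question, answered in log
        if not answered
    )
    return counts.most_common(limit)
```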

The API settings you’ve configured help keep responses fast and consistent, even during busy periods. If you see surges in traffic, revisit the response settings, such as token limits and model choice, to maintain performance.

Keeping your content up to date is key to maintaining the chatbot’s reliability. A few minutes of regular maintenance can go a long way in ensuring your customers always receive accurate and timely information.

Now, with your chatbot handling routine inquiries, your team can focus on more complex tasks. OpenAssistantGPT’s paid plans include unlimited messaging, so the platform adds no per-message fees as your chatbot scales; OpenAI still bills the API usage on your own account.

FAQs

What’s the difference between GPT-3.5 and GPT-4, and how do I choose the right one for my chatbot?

GPT-4 takes a step beyond GPT-3.5, handling more intricate conversations and tasks. Its GPT-4 Turbo variant also offers a much larger context window of up to 128,000 tokens, compared with roughly 16,000 tokens for gpt-3.5-turbo. This makes GPT-4 well suited to lengthy discussions or large amounts of reference material. The trade-offs are slower responses and higher cost per interaction.

If your chatbot needs to tackle detailed reasoning, solve complex problems, or manage extensive context effectively, GPT-4 is the way to go. On the other hand, for straightforward interactions or when speed and cost-efficiency are priorities, GPT-3.5 often gets the job done. The right choice depends on your project’s complexity, required response speed, and budget constraints.
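The context-window difference can be turned into a simple check before sending a long conversation. The limits below are the approximate figures quoted above (128,000 and about 16,000 tokens), the four-characters-per-token ratio is only a rough estimate, and the helper names are hypothetical. Note that this checks length only; it says nothing about whether a query needs GPT-4’s stronger reasoning.

```python
import math
from typing import Optional

# Approximate context windows quoted in this FAQ, in tokens.
CONTEXT_WINDOWS = {"gpt-3.5-turbo": 16_000, "gpt-4-turbo": 128_000}


def estimate_tokens(text: str) -> int:
    """Rough estimate: about four characters of English text per token."""
    return math.ceil(len(text) / 4)


def fits_context(messages: list[str], model: str, reserve_for_reply: int = 300) -> bool:
    """True if the conversation plus room for the reply fits within the model's window."""
    used = sum(estimate_tokens(m) for m in messages)
    return used + reserve_for_reply <= CONTEXT_WINDOWS[model]


def smallest_fitting_model(messages: list[str]) -> Optional[str]:
    """Prefer the cheaper model and move to the larger window only when needed."""
    for model in ("gpt-3.5-turbo", "gpt-4-turbo"):
        if fits_context(messages, model):
            return model
    return None  # too long for either: summarize or trim the history first
```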

How can I keep my OpenAI API key secure, and what steps should I take if it’s compromised?

To ensure the security of your OpenAI API key, it’s crucial to store it in a safe place, like an environment variable, and avoid including it in client-side code. Make it a habit to rotate your keys periodically and keep an eye on their activity to catch any unusual behavior. Under no circumstances should you share your API key publicly or in unsecured locations.

If you suspect your API key has been compromised, act quickly. Revoke the key through your OpenAI account dashboard, generate a new one, and update your environment variables or application settings with the replacement. Prompt action minimizes the risk of unauthorized access to your account and API.
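When you monitor key activity in your own logs, avoid creating a new leak by never writing the full key. A small masking helper, hypothetical and not part of any OpenAI SDK, that keeps only enough characters to tell keys apart:

```python
def mask_key(key: str, visible: int = 4) -> str:
    """Keep the first three characters (the 'sk-' prefix) and the last few; hide the rest."""
    if len(key) <= visible + 3:
        return "*" * len(key)  # too short to reveal anything safely
    return f"{key[:3]}...{key[-visible:]}"
```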

How can I improve my chatbot's performance and reduce response times?

To get the most out of your chatbot and reduce response times, start by fine-tuning your API settings and selecting the best OpenAI model for your specific needs. For faster responses without sacrificing too much quality, consider using smaller models like gpt-3.5-turbo.

Another way to boost efficiency is by caching frequently used responses, which cuts down on repetitive processing. Additionally, refine your prompts to make them more direct and focused, ensuring smoother interactions. Keep an eye on your chatbot's performance through analytics tools to spot and resolve any slowdowns or inefficiencies.

Finally, organize your custom content thoughtfully and keep it relevant. This enables the AI to retrieve information more quickly, delivering accurate and helpful answers to your users.