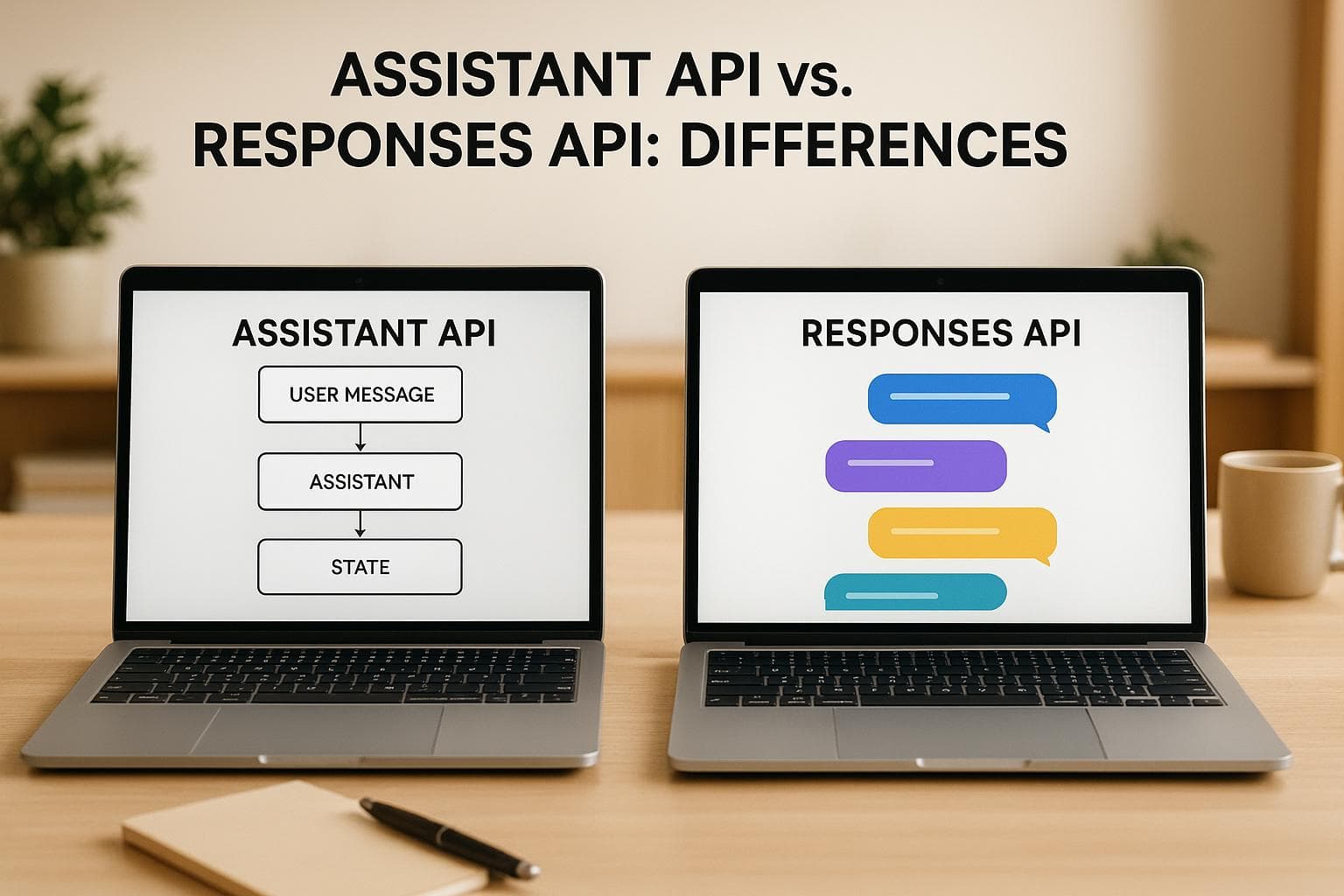

Assistant API vs. Responses API: Differences

OpenAI is transitioning from the Assistant API to the Responses API by mid-2026. Here’s what you need to know:

- Assistant API: Focuses on creating AI assistants with memory and thread management. Ideal for projects requiring long-term conversational context and detailed customization.

- Responses API: Designed for speed and simplicity, it automates state management and supports real-time interactions. Best for dynamic, fast-response chatbots with less complexity.

Key Differences:

- State Management: Assistant API requires manual thread handling; Responses API automates this process.

- Performance: Responses API is faster and simpler; Assistant API offers more control but is slower.

- Integration: Responses API simplifies setup, while Assistant API demands more technical effort.

- Tool Usage: Both support tools, but Responses API integrates them more directly.

Plan for the migration now to avoid disruptions, especially if you rely on the Assistant API. For no-code users, platforms like OpenAssistantGPT simplify the transition.

Main Differences Between Assistant API and Responses API

Assistant API and Responses API differ in several key areas, shaping how developers choose the right tool for their projects.

State Management

One major distinction lies in how these APIs handle conversation state and memory. The Assistant API relies on explicit thread management. This means developers need to create and manage separate thread objects to track conversations, coordinating assistants, threads, messages, and runs to maintain context. On the other hand, the Responses API simplifies this process by reducing the complexity of state management. It handles conversation history more seamlessly, eliminating the need to juggle multiple objects.
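To make the contrast concrete, here is a minimal sketch of one question-and-answer turn in each style. It assumes a recent version of the official `openai` Python SDK. The helper names and the `gpt-4o-mini` model name are illustrative choices, not something the article prescribes. The client is passed in as a parameter, so nothing here touches the network until you supply a real one.

```python
def ask_with_assistants(client, assistant_id, question):
    """Assistants-style turn: thread -> message -> run -> read messages."""
    thread = client.beta.threads.create()
    client.beta.threads.messages.create(
        thread_id=thread.id, role="user", content=question
    )
    # create_and_poll blocks until the run reaches a terminal state.
    run = client.beta.threads.runs.create_and_poll(
        thread_id=thread.id, assistant_id=assistant_id
    )
    if run.status != "completed":
        raise RuntimeError(f"run ended with status {run.status!r}")
    # Messages are listed newest first by default, so index 0 is the reply.
    messages = client.beta.threads.messages.list(thread_id=thread.id)
    return thread.id, messages.data[0].content[0].text.value


def ask_with_responses(client, question, previous_response_id=None,
                       model="gpt-4o-mini"):
    """Responses-style turn: one call, chained via previous_response_id."""
    response = client.responses.create(
        model=model,
        input=question,
        previous_response_id=previous_response_id,
    )
    return response.id, response.output_text
```

With a real client (`from openai import OpenAI; client = OpenAI()`), a follow-up turn in the second style only needs the `id` returned by the previous call, whereas the first style has to keep the thread ID and create a new message and run each time.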

For platforms like OpenAssistantGPT, these differences affect how chatbots maintain user context and conversation history across interactions, influencing the choice of API based on the desired level of complexity and integration.

Tools and Features

Both APIs provide robust tools but through different approaches. The Assistant API includes features like Code Interpreter, File Search, and Function Calling, with the ability to use tools in parallel. It also offers a no-code Playground for easy configuration.

In contrast, the Responses API provides built-in capabilities such as web search, file search, and computer use. However, it lacks a visual configuration interface.

"The Assistants playground was really really useful – especially when we were first developing our solution. And there is no equivalent now for Responses."

- kduffie, OpenAI Developer Community User

Additionally, the Responses API introduces features like batch uploads and metadata support for vector store files, which enhance its functionality.
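As a rough illustration of how the built-in tools are switched on, the sketch below builds the `tools` list that would be passed to `client.responses.create(...)`. The tool type strings (`web_search_preview`, `file_search`) reflect the Responses API at the time of writing and may change. The helper name `build_tools` and the vector store ID in the usage note are hypothetical.

```python
def build_tools(vector_store_ids=None, web_search=False):
    """Return a `tools` list for a Responses API request."""
    tools = []
    if web_search:
        # Built-in web search needs no further configuration.
        tools.append({"type": "web_search_preview"})
    if vector_store_ids:
        # File search runs against one or more existing vector stores.
        tools.append({
            "type": "file_search",
            "vector_store_ids": list(vector_store_ids),
        })
    return tools
```

A call such as `client.responses.create(model="gpt-4o-mini", input="...", tools=build_tools(["vs_123"], web_search=True))` would then let the model decide when to search, with `vs_123` standing in for a real vector store ID.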

Performance and Speed

Performance is another area where developers have noted clear differences. The Responses API prioritizes speed and simplicity, making interactions faster and reducing complexity. Many developers have described its structure as "cleaner and better overall".

The Assistant API, while powerful, requires more intricate management of conversation states, threads, and tool integrations. Some developers have found this complexity manageable, describing it as "fairly convenient" for mapping assistants, threads, and messages. However, this added layer of detail can slow down response times.

"The overarching feedback was that while powerful, the Assistants API needed a more intuitive solution that streamlined interactions and managed states automatically."

- Zack Saadioui, Arsturn

These differences in speed and structure often influence which API developers choose for their projects.

Data Storage and Privacy

The way these APIs handle data storage also sets them apart, especially for applications with strict privacy requirements. The Assistant API uses persistent thread objects to maintain conversation history across multiple API calls. While this ensures continuity, it also requires careful management of data retention. In contrast, the Responses API offers greater flexibility, allowing developers more control over how and where conversation data is stored. This can be a significant advantage for applications that prioritize privacy.
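One way to exercise that control is to keep the history in your own application and ask the API not to persist responses. The sketch below assumes the `store` parameter of `responses.create` in the official `openai` Python SDK. The class name `LocalHistoryChat` and the model name are illustrative, and where you actually persist `history` (database, encrypted store, nowhere at all) is left to you.

```python
class LocalHistoryChat:
    """Keeps conversation history in the application instead of server-side."""

    def __init__(self, client, model="gpt-4o-mini"):
        self.client = client
        self.model = model
        self.history = []  # list of {"role": ..., "content": ...} dicts

    def send(self, user_text):
        self.history.append({"role": "user", "content": user_text})
        response = self.client.responses.create(
            model=self.model,
            input=list(self.history),  # resend the full history each turn
            store=False,               # ask the API not to persist the response
        )
        reply = response.output_text
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

The trade-off is that every turn resends the whole history, so token usage grows with conversation length. Trimming or summarizing old turns is a common mitigation.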

Integration Process

Integration complexity varies significantly between the two APIs. The Assistant API requires developers to manage relationships between assistants, threads, messages, and runs. This offers detailed control but comes with a more involved setup process. By comparison, the Responses API simplifies integration by reducing the number of objects developers need to handle. Its straightforward instructions make migration easier. OpenAI has also stated that the Responses API will eventually match the features of the Assistant API.

It’s worth noting the pricing difference: the Responses API charges $2.50 per 1,000 file_search calls, which can work out to roughly 50% more than the Assistant API under heavy usage.
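As a quick worked example of that arithmetic, the sketch below estimates the tool-call charge alone, using the $2.50 per 1,000 calls figure quoted above. It ignores token and storage costs, and the function name is illustrative.

```python
FILE_SEARCH_PRICE_PER_1K_CALLS = 2.50  # USD, the figure quoted in this article


def file_search_cost(calls):
    """Estimated USD charge for `calls` file_search tool calls."""
    return calls / 1000 * FILE_SEARCH_PRICE_PER_1K_CALLS


print(file_search_cost(40_000))  # 100.0
```

At 40,000 file_search calls per month, the tool-call charge alone would be about $100.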

When to Use Each API

Choosing between the Assistant API and the Responses API depends on what your chatbot needs to accomplish. Each API shines in different scenarios, depending on the complexity of the chatbot, the required features, and your development goals.

Best Uses for Assistant API

The Assistant API is ideal for chatbots that require long-term conversational memory and consistent behavior across extended interactions. If your project involves maintaining detailed conversation history, the Assistant API offers structured thread management to handle that complexity effectively.

For enterprise-level chatbots, especially those that need to manage detailed user profiles or track ongoing projects, the Assistant API’s multi-object integration provides the necessary tools. This makes it a great choice for bots that rely on accumulated conversation data to provide tailored responses or updates.

It’s also a strong option for specialized assistants with predefined roles. For example, if you’re building a technical support bot or an educational tutor, the Assistant API lets you configure the bot’s persona and tools upfront, ensuring consistent and reliable behavior.

Additionally, its structured state management is particularly helpful for processes that require sensitive tracking, although the Assistant API as a whole is slated for deprecation by mid-2026, so anything newly built on it has a limited lifespan.

Best Uses for Responses API

The Responses API is built for dynamic, real-time interactions and focuses on speed and simplicity.

This API is perfect for projects that prioritize efficiency and adaptability. Since OpenAI has positioned it as the long-term standard for AI agents, starting with the Responses API can save you from needing to migrate your chatbot later.

For real-time customer service chatbots, the Responses API is a better fit. Its streamlined design prioritizes quick response times, making it ideal when speed is more critical than maintaining a detailed conversation history.
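For real-time use, streaming is usually what makes a bot feel fast. The following sketch assumes the streaming mode of `responses.create` in the official `openai` Python SDK, where text arrives as `response.output_text.delta` events. The helper name `stream_reply`, the `on_delta` callback, and the model name are illustrative.

```python
def stream_reply(client, prompt, model="gpt-4o-mini", on_delta=print):
    """Stream a response, forwarding text chunks as they arrive."""
    chunks = []
    stream = client.responses.create(model=model, input=prompt, stream=True)
    for event in stream:
        # Other event types (created, completed, tool events) are skipped.
        if event.type == "response.output_text.delta":
            chunks.append(event.delta)
            on_delta(event.delta)
    return "".join(chunks)
```

In a web chatbot, `on_delta` would typically push each chunk to the browser over a WebSocket or server-sent events rather than printing it.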

If your chatbot needs to use dynamic tools - like retrieving real-time information or performing calculations - the Responses API provides built-in functionalities to handle these tasks. For OpenAssistantGPT users creating bots that need to search for the latest data or process multiple types of input (like text, images, or files), the Responses API eliminates the need for complex custom integrations.

Chatbots that handle multi-modal inputs, such as analyzing uploaded documents or processing images, also benefit from the Responses API’s enhanced input capabilities. This makes it a go-to choice for bots designed to manage diverse file types simultaneously.
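A mixed text, image, and file message can be sketched as below. The content part types (`input_text`, `input_image`, `input_file`) follow the Responses API input format as documented at the time of writing. The helper name and the example values in the usage note are hypothetical.

```python
def build_multimodal_input(text, image_url=None, file_id=None):
    """Build a single user message combining text with optional image/file."""
    content = [{"type": "input_text", "text": text}]
    if image_url:
        content.append({"type": "input_image", "image_url": image_url})
    if file_id:
        # file_id refers to a file previously uploaded through the Files API.
        content.append({"type": "input_file", "file_id": file_id})
    return [{"role": "user", "content": content}]
```

The result is passed as `input`, for example `client.responses.create(model="gpt-4o-mini", input=build_multimodal_input("Summarize this", file_id="file_abc"))`, where `file_abc` is a placeholder.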

For developers working on lead generation, customer inquiries, or website support, the Responses API simplifies state management while maintaining smooth conversation flow. Its optional server-side memory reduces development complexity, making it easier to implement and maintain.

Lastly, the Responses API is a great fit for prototypes and rapid development. Its single API call structure allows for faster testing and iteration compared to the multi-step process required by the Assistant API. This makes it a valuable tool for quickly showcasing chatbot functionality.


How to Switch from Assistant API to Responses API

Switching from the Assistant API to the Responses API requires careful planning to ensure your chatbot continues to function smoothly. This process involves understanding the differences between the two APIs, updating your code, and thoroughly testing everything before deployment.

Timeline and Support

Developers have until mid-2026 to complete the migration. OpenAI provides detailed guides and community support through its documentation and Developer Community forum to assist with the transition.

For new projects, it’s recommended to start directly with the Responses API. Existing implementations, however, should be migrated well before the sunset date. Starting early gives you ample time to test and deploy changes without disruption.

Migration Steps

Begin by assessing your chatbot’s current setup and identifying the key Assistant API functions it relies on most heavily. This understanding is crucial as the Responses API introduces significant changes, such as automating state management, which eliminates the need for manually tracking conversation history.

You’ll need to refactor your code to use conversation.id for maintaining persistent state and supporting simultaneous tool calls. One major advantage of the Responses API is its ability to streamline message handling, allowing for easier chaining and bulk sending of messages. This reduces the amount of boilerplate code required to manage conversation threads.
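A minimal sketch of that refactor is shown below. It assumes a recent `openai` Python SDK that exposes the Conversations API (`client.conversations.create`) and the `conversation` parameter on `responses.create`. The helper names and model name are illustrative. The conversation ID takes over the role the thread ID played in the Assistant API.

```python
def start_conversation(client):
    """Create a server-side conversation and return its id."""
    conversation = client.conversations.create()
    return conversation.id


def send_turn(client, conversation_id, text, model="gpt-4o-mini"):
    """Send one user turn; prior turns are pulled from the conversation."""
    response = client.responses.create(
        model=model,
        input=text,
        conversation=conversation_id,
    )
    return response.output_text
```

In practice you would store the conversation ID alongside your user or session record, much as you would have stored a thread ID before.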

In August 2025, a developer named kachari.bikram42 shared a detailed Python example on the OpenAI Developer Community forum. This example demonstrated migration techniques using the AsyncOpenAI client and AsyncConversations. It also covered loading custom tools like OpenAIWebSearchTool and managing conversation flow with conversation.id. The code included functions such as initiate_streaming_conversation, __handle_function_calls, __submit_tool_outputs, and __process_streaming_events to manage interactions and tool execution.
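The forum post's code is not reproduced here. As a separate, simplified illustration of the same idea (detect function calls, run them, submit the outputs), the sketch below uses the `function_call` and `function_call_output` item types of the Responses API. It is non-streaming, and `run_with_tools`, `handlers`, and `max_rounds` are hypothetical names chosen for this example.

```python
import json


def run_with_tools(client, conversation_id, user_text, tools, handlers,
                   model="gpt-4o-mini", max_rounds=5):
    """Loop until the model answers without requesting a function call."""
    pending = user_text
    for _ in range(max_rounds):
        response = client.responses.create(
            model=model,
            input=pending,
            tools=tools,
            conversation=conversation_id,
        )
        calls = [item for item in response.output
                 if item.type == "function_call"]
        if not calls:
            return response.output_text
        # Run each requested function locally and send the results back.
        pending = []
        for call in calls:
            result = handlers[call.name](**json.loads(call.arguments))
            pending.append({
                "type": "function_call_output",
                "call_id": call.call_id,
                "output": json.dumps(result),
            })
    raise RuntimeError("tool loop did not finish within max_rounds")
```

Here `handlers` maps each function name declared in `tools` to a plain Python callable. The `max_rounds` cap is a defensive choice to avoid an unbounded loop if the model keeps requesting tools.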

Once you’ve refactored your code, perform thorough unit and manual testing to ensure everything works as expected. This preparation will help you achieve a smooth migration experience, especially when deploying on platforms like OpenAssistantGPT.

How OpenAssistantGPT Makes Migration Easier

For those using OpenAssistantGPT, the migration process becomes much simpler. The platform is designed to handle much of the technical complexity for you. Since OpenAssistantGPT is built to work seamlessly with OpenAI's APIs, it automatically manages the transition behind the scenes.

With OpenAssistantGPT, you don’t need to worry about refactoring code or manually managing conversation states. The platform’s no-code interface abstracts these technical details, letting you focus on your chatbot’s functionality rather than the underlying API architecture. Automatic updates ensure your chatbot continues to operate without interruptions as OpenAI shifts from the Assistant API to the Responses API.

Organizations using OpenAssistantGPT’s Enterprise plan benefit even further. Features like SAML/SSO authentication remain fully functional during the migration, with no additional configuration needed. The platform’s SLA guarantees ensure your chatbot stays operational throughout the transition.

This managed approach allows you to leverage the Responses API’s improved performance and streamlined architecture without diving into technical complexities. Features like dynamic tool integration and faster response times are automatically enabled as the platform adopts the new API. For businesses relying on chatbots for tasks like lead generation, customer support, or website integration, OpenAssistantGPT ensures continuity and efficiency without requiring extra technical resources.

Pros and Cons of Each API

When deciding between the Assistant API and the Responses API, it’s important to weigh their strengths and limitations against your project’s specific needs. Each API serves different purposes, so the right choice depends on factors like project complexity, performance requirements, and long-term maintenance.

The Assistant API is a powerful tool for projects that demand precise control over conversation flow. It allows developers to manually manage conversation threads and states, offering a high level of customization. However, this control comes with added complexity, requiring more coding effort and technical expertise to handle aspects like error management and state persistence.

On the other hand, the Responses API simplifies development by automating state management and message processing. This reduces the need for manual coding, making it easier and faster to build functional chatbots. While it doesn’t offer the same granular control as the Assistant API, it excels in efficiency, especially when managing multiple conversations. Its streamlined approach minimizes the time and effort required for development and maintenance.

For projects with tight timelines or limited resources, the Responses API is a practical choice due to its automation and ease of use. However, if your project requires detailed customization or predictable manual control, the Assistant API might be a better fit, even though it demands more effort and expertise.

Cost is another factor to consider. The Assistant API’s manual processes may lead to higher development and maintenance expenses, while the Responses API’s efficiency can help reduce these costs over the long term. Ultimately, your decision should align with your project’s technical requirements, timeline, and priorities.

Feature Comparison Table

| Feature | Assistant API | Responses API |
|---|---|---|
| State Management | Manual implementation required | Automated with conversation.id |
| Development Complexity | High – requires custom coding | Low – streamlined implementation |
| Performance | Variable based on implementation | Optimized and consistent |
| Tool Integration | Manual setup and management | Simplified tool calling |
| Learning Curve | Steep for beginners | Moderate for most developers |
| Customization Level | High granular control | Balanced automation with flexibility |
| Response Speed | Depends on implementation | Generally faster |
| Resource Usage | Higher due to manual processes | More efficient automated handling |

Conclusion: Which API Should You Choose?

After breaking down the features, state management, and integration options, here’s how to decide which API fits your project best.

Your choice should depend on your project’s requirements, your team’s expertise, and your overall goals. If your project calls for extensive customization and precise control over chatbot interactions, the Assistant API is the way to go. It’s particularly useful for teams with strong development skills, as it allows for custom logic and tailored conversation flows, giving you the flexibility to design specialized chatbots.

On the other hand, if you’re looking for a quick and simple solution without diving into complex coding, OpenAssistantGPT is a great option. Built to work seamlessly with OpenAI’s Assistant API, this no-code platform lets you create custom chatbots in just minutes. With only a few lines of code, you can embed these chatbots on your website. Whether you need a small widget or a full-screen implementation, OpenAssistantGPT provides flexible embedding options to match your website’s design. Plus, the platform offers a free tier with up to 500 messages per month, giving you the chance to test and refine your chatbot concept before committing to a larger plan.

"I have built a SaaS platform designed to create chatbots using the Assistant API. The tool is called OpenAssistantGPT, and you can easily build a chatbot with custom content for any website. In just a few minutes, you can create your chatbot using our app." - marcolivierbouch

FAQs

What factors should developers consider when choosing between the Assistant API and the Responses API for their chatbot projects?

When choosing between the Assistant API and the Responses API, it’s important to consider the level of complexity and customization your project demands.

The Responses API shines when speed and simplicity are the priority. It automates state management and simplifies the handling of conversation history, which makes it a strong choice for most new chatbot applications.

On the flip side, the Assistant API provides more granular, manual control over threads, messages, and runs, which suits projects that need detailed customization. That said, it requires extra setup effort and is slated for deprecation. For new projects, the Responses API stands out as a forward-thinking option, especially with its regular updates and migration tools.

What changes should I expect in user data handling and privacy when switching from the Assistant API to the Responses API, especially for applications with strict data retention requirements?

Switching from the Assistant API to the Responses API brings notable changes in how user data is managed and stored. With the Responses API, conversation data is typically kept for 30 days. This retention period is primarily aimed at improving services, enforcing policies, and detecting abuse. For applications with strict privacy requirements, this defined timeframe offers a clear and predictable structure.

On the other hand, the Assistant API doesn't have as clearly outlined data retention policies. While this might allow for more flexible handling of data, it could also necessitate extra configuration to meet rigorous privacy standards. For businesses focused on compliance, the Responses API's structured approach to data retention could provide added reassurance.

How can developers smoothly transition from the Assistant API to the Responses API before the August 2026 deadline?

To make the migration process as seamless as possible, begin by diving into the official migration documentation. This will give you a clear understanding of the steps and adjustments required. After that, set up a dedicated testing environment where you can fine-tune workflows, update file-handling procedures, and align with the new API structure. Be sure to examine any changes in tool integration and overall functionality.

Once you've made the necessary updates, conduct extensive testing to verify both stability and performance. When you're confident everything is running smoothly, start transitioning production workloads gradually, keeping a close eye on potential issues. Completing these steps well ahead of the August 2026 deadline will help you avoid disruptions and stay compliant.
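One common way to shift production traffic gradually is a deterministic percentage rollout, sketched below. This is a generic technique rather than anything OpenAI prescribes, and the function name and the 0 to 100 `rollout_percent` convention are assumptions for this example.

```python
import hashlib


def use_responses_api(user_id, rollout_percent):
    """Return True if this user should be served by the new code path.

    The same user always lands in the same bucket, so raising
    rollout_percent only ever adds users to the new path.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable value in 0..99
    return bucket < rollout_percent
```

Starting at a small percentage, watching error rates and latency, and then raising the value step by step keeps any regression limited to a small share of users.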