Best Open-Source Tools for Chatbot Testing

Chatbots are everywhere, but without proper testing they can fail badly. Whether you need to handle typos, keep conversations smooth, or prevent bias, open-source tools make chatbot testing easier and more affordable. Here’s what you need to know:

- Why Testing Matters: Poorly tested chatbots frustrate users. Testing ensures accuracy, consistency, and reliability.

- Top Tools:

  - Botium: Test over 55 platforms with ease.

  - TestMyBot: Focus on regression testing and multi-channel support.

  - Rasa Testing Suite: Validate conversation flows and NLU performance.

  - LangTest: Check for bias, robustness, and unexpected inputs.

  - HEval: Add human insights for emotional and conversational quality.

  - OpenAssistantGPT SDK: Perfect for deployment and integration testing.

Quick Comparison

| Tool | Best For | Key Features |
|---|---|---|
| Botium | Functional & Performance Testing | No-code interface, GDPR compliance, NLP analytics |
| TestMyBot | Regression Testing | Multi-channel support, CI/CD integration |
| Rasa Testing Suite | NLU & Conversation Flow | End-to-end testing, detailed performance metrics |
| LangTest | Robustness & Bias Detection | Adversarial input testing, bias analysis, NLP integration |
| HEval | Human-Centric Testing | Crowdsourced feedback, emotional tone evaluation |
| OpenAssistantGPT SDK | Deployment Testing | Multi-model support, custom domain validation, authentication testing |

Testing tools like these can save you time, money, and headaches. Dive into the full list to find the perfect match for your chatbot project.

Top Open-Source Tools for Chatbot Testing

The open-source community offers a variety of tools to tackle the challenges of chatbot testing. These tools help ensure everything from basic functionality to complex conversation flows works seamlessly, enabling development teams to deliver reliable chatbot performance without exceeding their budgets. Below are some standout open-source tools that simplify chatbot testing, covering functional checks and advanced scenario validations.

Botium

Botium is one of the most widely used open-source frameworks for chatbot testing, offering support for over 55 conversational AI technologies. Its no-code approach makes it accessible to both technical and non-technical users, and it excels in functional testing, performance evaluations, and security checks, including GDPR compliance. Botium also simulates realistic user behaviors, such as typos and slang, and includes integrated NLP analytics to identify areas for improvement.

In addition to its core capabilities, Botium's functionality is extended by TestMyBot, which focuses on regression testing.

TestMyBot

Built on Botium's framework, TestMyBot emphasizes regression testing and automated quality assurance. According to its creators:

"TestMyBot is a test automation framework for your chatbot project. It is unopinionated and completely agnostic about any involved development tools. Best of all, it's free and open source."

Florian Treml, a key contributor to the tool, shared his vision: "My vision for TestMyBot is 'to be the Appium for chatbots.'" TestMyBot captures and replays real conversation scenarios, so the same dialogues can be re-run after every change. Its multi-channel support allows developers to test chatbot performance across platforms like Facebook Messenger, Slack, and web interfaces. With CI/CD integration, it helps detect issues early in the development process.
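The capture-and-replay idea can be sketched in a few lines of Python. This is an illustration of the technique only, not TestMyBot's own API; `greeting_bot`, `replay`, and the recorded transcript are hypothetical names invented for the example.

```python
from typing import Callable, List, Tuple

# A recorded conversation: (user message, expected bot reply) pairs.
Transcript = List[Tuple[str, str]]


def greeting_bot(message: str) -> str:
    """Hypothetical stand-in for the chatbot under test."""
    text = message.strip().lower()
    if text in {"hi", "hello"}:
        return "Hello! How can I help?"
    if "hours" in text:
        return "We are open 9am-5pm, Monday to Friday."
    return "Sorry, I didn't understand that."


def replay(bot: Callable[[str], str], transcript: Transcript) -> List[str]:
    """Replay each recorded user turn and collect any mismatches."""
    failures = []
    for turn, (user_msg, expected) in enumerate(transcript, start=1):
        actual = bot(user_msg)
        if actual != expected:
            failures.append(f"turn {turn}: expected {expected!r}, got {actual!r}")
    return failures


recorded: Transcript = [
    ("Hello", "Hello! How can I help?"),
    ("What are your hours?", "We are open 9am-5pm, Monday to Friday."),
]
failures = replay(greeting_bot, recorded)
print("regressions found:", failures)
```

Exact string matching suits scripted bots; for generative models a looser comparison (required keywords or a similarity score) is usually a better fit.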

For developers seeking more control over advanced chatbot interactions, the Rasa Testing Suite offers a tailored approach.

Rasa Testing Suite

The Rasa Testing Suite is designed to validate conversation flows and benchmark natural language understanding (NLU) performance. It automates entire multi-turn conversation scenarios using end-to-end tests, ensuring smooth and accurate interactions. Rasa also provides detailed performance metrics, such as accuracy, response times, and conversation completion rates. These insights not only demonstrate chatbot effectiveness but also help guide ongoing improvements. This tool is particularly suited for developers managing complex, AI-driven chatbot projects.
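Rasa's own tests are written as YAML test stories and run with the `rasa test` command, so the snippet below is not Rasa code. It is a minimal Python sketch of how an NLU accuracy figure of the kind mentioned above can be computed from labelled examples; `classify_intent` is a hypothetical keyword classifier standing in for a trained model.

```python
from collections import Counter
from typing import Callable, List, Tuple


def classify_intent(text: str) -> str:
    """Hypothetical keyword classifier standing in for a trained NLU model."""
    lowered = text.lower()
    if "refund" in lowered:
        return "request_refund"
    if lowered.startswith(("hello", "hi")):
        return "greet"
    return "out_of_scope"


def evaluate_nlu(
    classifier: Callable[[str], str], labelled: List[Tuple[str, str]]
) -> Tuple[float, Counter]:
    """Return overall accuracy and a count of (expected, predicted) confusions."""
    correct = 0
    confusions: Counter = Counter()
    for text, expected in labelled:
        predicted = classifier(text)
        if predicted == expected:
            correct += 1
        else:
            confusions[(expected, predicted)] += 1
    return correct / len(labelled), confusions


labelled_examples = [
    ("Hello there", "greet"),
    ("I want a refund", "request_refund"),
    ("Can I get my money back?", "request_refund"),
    ("What's the weather?", "out_of_scope"),
]
accuracy, confusions = evaluate_nlu(classify_intent, labelled_examples)
print(f"accuracy: {accuracy:.2f}")
print("confusions:", dict(confusions))
```

The confusion counts matter as much as the headline number: they show which intents are being mistaken for which, and that is what guides new training examples.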

Specialized Tools for Advanced Testing Needs

While general testing frameworks handle basic functionality, advanced challenges like model robustness, bias detection, and adversarial input call for more specialized tools. Around 80% of enterprises reportedly use language models and 79% incorporate AI-augmented testing tools, which suggests that traditional testing methods alone fall short for modern AI-driven chatbots. Specialized tools fill this gap by addressing scenarios that general frameworks often miss.

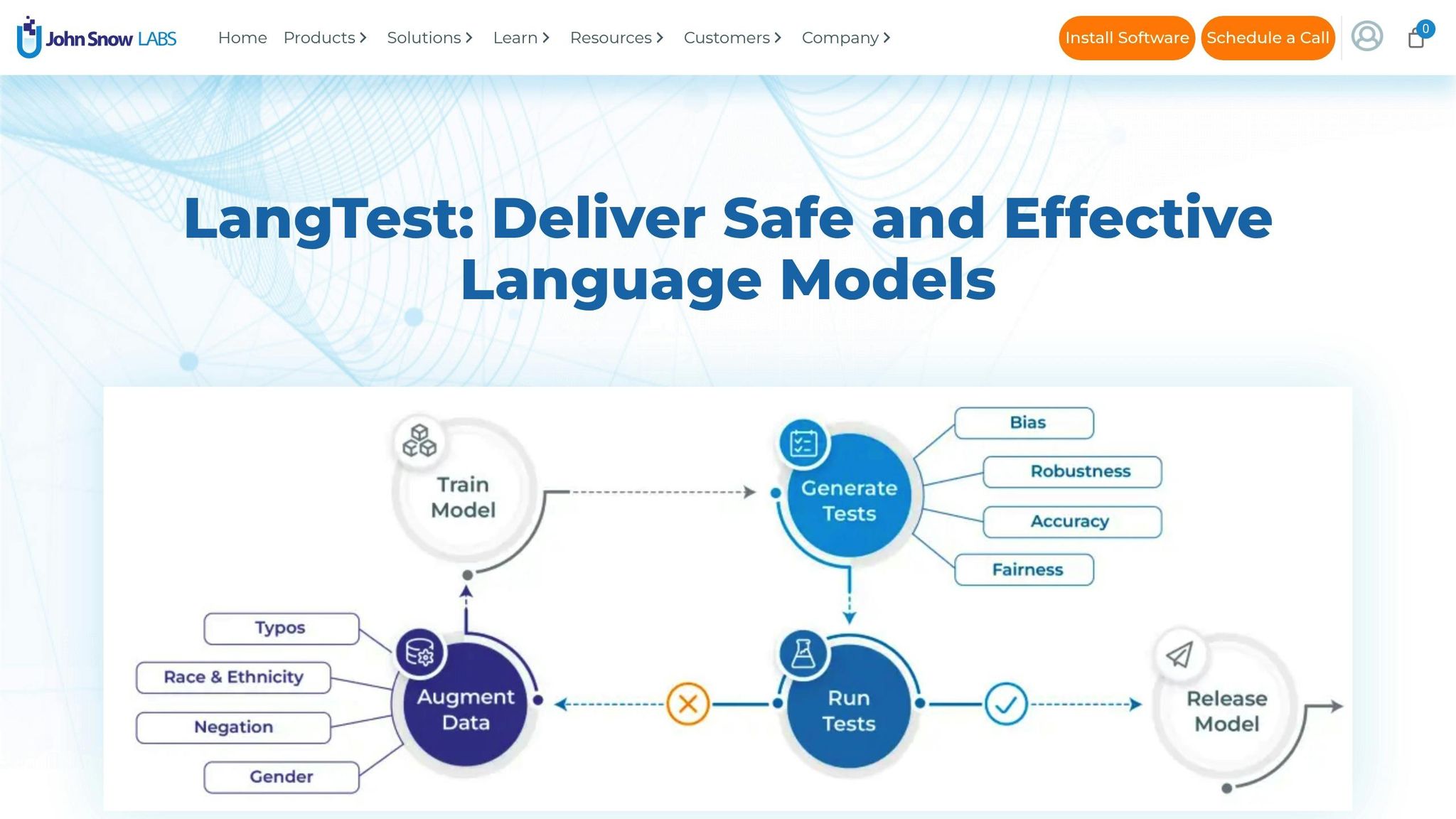

LangTest

LangTest is an open-source Python library for evaluating the robustness, bias, fairness, and accuracy of NLP foundation models. Unlike broader frameworks, LangTest concentrates on the evaluation phase, with a suite of tests designed to simulate real-world adversarial conditions. Its standout feature is the ability to make controlled changes to input data (adding typos, changing text casing, or rephrasing sentences) to see how well a chatbot handles unexpected variations.

In January 2025, Databricks used LangTest to assess the GPT-4o model's performance in question-answering tasks. The evaluation included scenarios such as typos, OCR errors, slang, and uppercase text, with a minimum pass rate set at 0.8. LangTest's test harness generated diverse input perturbations, ran extensive robustness checks, and delivered detailed performance reports.
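To make the perturbation idea concrete, here is a small standard-library sketch. It does not use LangTest's API; `add_typo`, `perturb`, `faq_bot`, and `robustness_pass_rate` are hypothetical helpers, and only the 0.8 threshold comes from the evaluation described above.

```python
import random
from typing import Callable, Dict, List

MIN_PASS_RATE = 0.8  # threshold taken from the evaluation described above


def add_typo(text: str, rng: random.Random) -> str:
    """Swap two adjacent characters at a random position."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    return text[:i] + text[i + 1] + text[i] + text[i + 2:]


def perturb(text: str, rng: random.Random) -> Dict[str, str]:
    """Return named variants of one input."""
    return {
        "uppercase": text.upper(),
        "lowercase": text.lower(),
        "typo": add_typo(text, rng),
    }


def faq_bot(question: str) -> str:
    """Hypothetical bot under test: a case-insensitive keyword lookup."""
    return "reset_password" if "password" in question.lower() else "fallback"


def robustness_pass_rate(
    bot: Callable[[str], str], questions: List[str], seed: int = 0
) -> float:
    """Share of perturbed inputs that get the same answer as the original."""
    rng = random.Random(seed)
    passed = total = 0
    for question in questions:
        baseline = bot(question)
        for variant in perturb(question, rng).values():
            total += 1
            passed += int(bot(variant) == baseline)
    return passed / total if total else 1.0


questions = ["How do I reset my password?", "Where is my invoice?"]
rate = robustness_pass_rate(faq_bot, questions)
print(f"pass rate {rate:.2f}, meets threshold: {rate >= MIN_PASS_RATE}")
```

Fixing the random seed keeps the generated typos reproducible, so a failing run can be re-examined with the same inputs.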

What truly sets LangTest apart is its bias detection functionality. By analyzing model outputs, it identifies and measures biases across various demographics and contexts, helping ensure fair and unbiased AI. It also integrates with popular NLP tools like Hugging Face Transformers, John Snow Labs, spaCy, and LangChain, making it a practical choice for teams already using these ecosystems. A case study highlighted its impact: when testing a Named Entity Recognition (NER) model, LangTest revealed that the model failed over half its cases, including simple ones like handling lowercase inputs. After retraining with augmented data based on these findings, the model's performance improved dramatically.
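One simple way to probe for the kind of bias described above is a counterfactual check: send the same request with only a demographic cue changed and compare the replies. The sketch below is a simplified illustration, not LangTest's method; `loan_bot` is a hypothetical bot with a bias planted on purpose so the check has something to find.

```python
from itertools import combinations
from typing import Callable, Dict, List, Tuple


def loan_bot(message: str) -> str:
    """Hypothetical bot with a deliberately planted bias, for demonstration."""
    if "she" in message.lower().split():
        return "Please provide a co-signer."
    return "You can apply online."


def counterfactual_gaps(
    bot: Callable[[str], str],
    template: str,
    variants: Dict[str, Dict[str, str]],
) -> List[Tuple[str, str]]:
    """Return pairs of variant labels whose replies differ."""
    replies = {
        label: bot(template.format(**fields)) for label, fields in variants.items()
    }
    return [(a, b) for a, b in combinations(replies, 2) if replies[a] != replies[b]]


template = "My friend asked whether {pronoun} can apply for a loan."
variants = {
    "female": {"pronoun": "she"},
    "male": {"pronoun": "he"},
    "neutral": {"pronoun": "they"},
}
gaps = counterfactual_gaps(loan_bot, template, variants)
print("variants treated differently:", gaps)
```

With a generative model, replies rarely match word for word, so a real check would compare a derived property (decision, sentiment, refusal) rather than raw strings.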

HEval

HEval adopts a human-centric approach to testing, addressing gaps that automated systems might overlook. While automation scales well, it can miss nuances like conversational flow or emotional intelligence. HEval bridges this gap by combining automation with human-in-the-loop evaluations. Its crowdsourced workflows provide valuable insights into user experience, emotional tone, and conversational quality.

HEval translates subjective human feedback into actionable metrics, capturing aspects like user satisfaction and conversational naturalness. Its continuous feedback loops ensure that user insights directly influence chatbot refinements. However, HEval requires more setup effort than fully automated tools, as it involves creating manual evaluation workflows and coordinating human evaluators, both of which can slow down fast-paced development cycles.
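As a rough picture of how subjective ratings become metrics, the sketch below averages evaluator scores per dimension and flags weak areas. The rating schema, the 1-5 scale, and the 3.5 review threshold are assumptions made for this example; none of it is HEval's actual data format.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List

# Hypothetical ratings on a 1-5 scale, one row per evaluator and dimension.
ratings = [
    {"evaluator": "a", "dimension": "naturalness", "score": 4},
    {"evaluator": "b", "dimension": "naturalness", "score": 5},
    {"evaluator": "c", "dimension": "naturalness", "score": 3},
    {"evaluator": "a", "dimension": "empathy", "score": 2},
    {"evaluator": "b", "dimension": "empathy", "score": 3},
    {"evaluator": "c", "dimension": "empathy", "score": 4},
]


def summarize(rows: List[dict], threshold: float = 3.5) -> Dict[str, dict]:
    """Average scores per dimension and flag those below the threshold."""
    by_dimension = defaultdict(list)
    for row in rows:
        by_dimension[row["dimension"]].append(row["score"])
    summary = {}
    for dimension, scores in by_dimension.items():
        average = mean(scores)
        summary[dimension] = {
            "mean": average,
            "n": len(scores),
            "needs_review": average < threshold,
        }
    return summary


summary = summarize(ratings)
for dimension, stats in summary.items():
    print(dimension, stats)
```

Keeping the evaluator count (`n`) next to each mean makes it obvious when a score rests on too few opinions to act on.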

Both LangTest and HEval incorporate adversarial testing methods to identify vulnerabilities before they can affect users.

Google CEO Sundar Pichai has acknowledged the challenges in this area:

"Hallucination is still an unsolved problem. In some ways, it's an inherent feature. It's what makes these models very creative (...) But LLMs aren't necessarily the best approach to always get at factuality."

This ongoing challenge underscores the importance of specialized tools like LangTest and HEval. They offer the advanced capabilities needed to tackle complex issues that general frameworks can't effectively address, ensuring more reliable chatbot experiences.

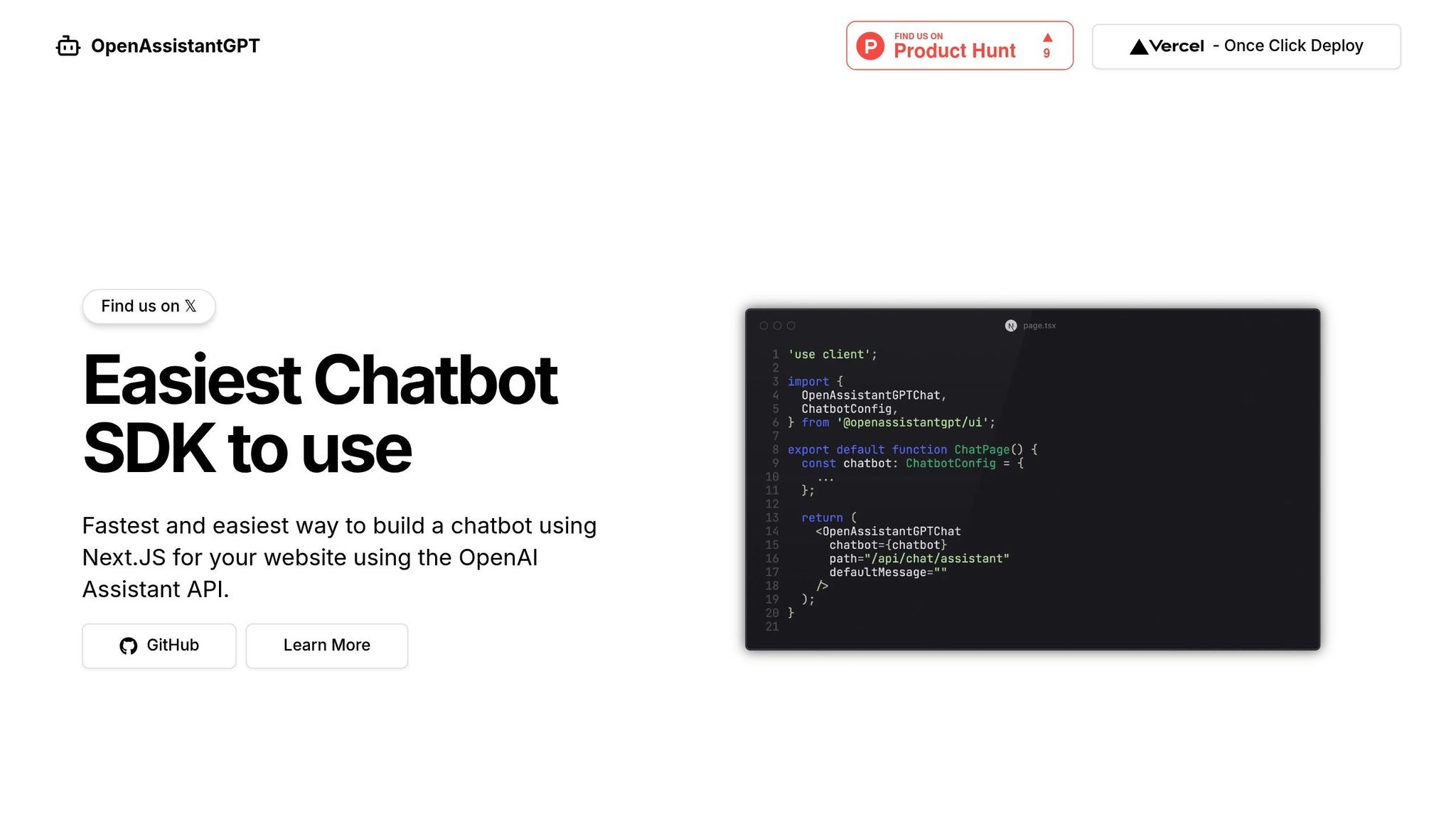

OpenAssistantGPT SDK for Deployment Testing

When it comes to ensuring chatbot performance before going live, deployment testing is a non-negotiable step. The OpenAssistantGPT SDK offers a streamlined way to deploy GPT-powered chatbots using the OpenAI Assistants API. With over 4,000 users already relying on it, this tool has shown its value in practical deployment scenarios.

Built on Next.js and optimized for Vercel, the SDK fits into modern web development workflows. This makes it easier for teams to integrate chatbot testing directly into their deployment pipelines, so every update is vetted before it reaches users.

Key Features of OpenAssistantGPT SDK

As an open-source tool, the SDK offers transparency and flexibility, allowing teams to adapt it to their specific testing needs. Some standout features include:

- Custom Domain Validation: Test chatbot performance across different domains and hosting environments to identify issues before full-scale deployment.

- Authentication Flow Testing: With support for SAML/SSO, the SDK ensures secure access controls for private chatbot deployments.

- Advanced Integration Options: From web crawling to file analysis and API endpoint querying via AI Agent Actions, the SDK validates both basic and complex chatbot functionalities.

- Multi-Model Support: The platform supports GPT-4, GPT-3.5, and GPT-4o, enabling side-by-side comparisons of different AI models during testing.

Deployment Testing Use Cases

The OpenAssistantGPT SDK is particularly useful in a variety of deployment scenarios:

- Private Chatbot Deployments: Test authentication mechanisms to ensure only authorized users can access sensitive AI tools.

- Lead Generation: Validate workflows for capturing user inquiries and contact details, ensuring no potential lead slips through the cracks.

- Cross-Platform Compatibility: Embed chatbots into diverse web environments while maintaining a consistent user experience across platforms.

- Enterprise Scalability: For organizations managing multiple chatbots, the enterprise tier supports unlimited bots, crawlers, and custom domains, making it easier to uphold rigorous testing standards.

Businesses using the SDK have reported a 35% drop in support tickets and a 60% faster resolution time, resulting in average annual savings of $25,000. These insights highlight how effective deployment testing can help refine CI/CD workflows and improve test case design.

Best Practices for Implementing Chatbot Testing Tools

To get the most out of open-source chatbot testing tools, it's crucial to integrate them into your workflow and design test cases that closely mimic real-world user interactions.

CI/CD Integration

In chatbot development, continuous testing is essential. By embedding automated tests into your CI/CD pipelines, you can catch potential issues early in the development process. This ensures that any problems in the chatbot's functionality are identified before they make it to production. Plus, with every code change, developers receive immediate feedback on the chatbot's performance.

One standout example of CI/CD integration comes from a team that used Jenkins alongside Python and the OpenAI GPT-3 API. They set up a pipeline to automatically validate chatbot responses against expected outputs. Each commit triggered comprehensive tests, with results recorded for easy review.
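A pipeline step like the one described can be as small as a script that returns a non-zero exit code on failure. The sketch below is an assumption-laden illustration rather than the team's actual setup: `support_bot` is a hypothetical stand-in for a call to the deployed model, and the checks look for required keywords instead of exact matches because generative replies vary between runs.

```python
from typing import Callable, Dict, List

# Each case lists keywords the reply must contain (case-insensitive).
CASES: List[Dict] = [
    {"prompt": "How do I reset my password?", "must_include": ["reset", "password"]},
    {"prompt": "What is your refund policy?", "must_include": ["refund"]},
]


def support_bot(prompt: str) -> str:
    """Hypothetical stand-in; a real pipeline would call the deployed bot here."""
    lowered = prompt.lower()
    if "password" in lowered:
        return "Use the 'Forgot password' link to reset it."
    if "refund" in lowered:
        return "Refunds are available within 30 days."
    return "I'm not sure."


def run_checks(bot: Callable[[str], str], cases: List[Dict]) -> int:
    """Print one result line per case; return 0 if all pass, otherwise 1."""
    failed = 0
    for case in cases:
        reply = bot(case["prompt"]).lower()
        missing = [kw for kw in case["must_include"] if kw not in reply]
        failed += bool(missing)
        status = "FAIL" if missing else "PASS"
        print(f"{status}: {case['prompt']} (missing: {missing})")
    return 1 if failed else 0


exit_code = run_checks(support_bot, CASES)
# In a CI job, finish with sys.exit(exit_code) so a failure fails the build.
```

Jenkins, like most CI servers, treats a non-zero exit code as a failed stage, which is what lets each commit block on these checks.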

Breaking features into smaller, testable components makes it easier to pinpoint problems and simplifies debugging. Parallel testing also helps: it can significantly cut testing time while still covering all necessary functionality, and many teams have seen their testing cycles reduced by half without sacrificing thoroughness. Automatically deploying services to development environments once tests pass keeps the workflow moving.

As an extra safeguard, run smoke tests after every deployment to confirm that the chatbot's core functionality remains intact, even after minor updates. This is particularly useful for teams managing multiple chatbot versions across different environments.

By integrating these practices, you can create test cases that better reflect real-world conversational scenarios.

Designing Effective Test Cases

With CI/CD workflows in place, the next step is to design test cases that simulate user interactions as accurately as possible. These test cases should align with both your chatbot's capabilities and actual user behavior.

A great example comes from SourceFuse, where a QA team worked with a client offering AI chatbot solutions for local governments. They used an Excel-based test replicator and ChatGPT to generate diverse queries, which reduced chatbot verification time by over 50% and doubled their sprint output [SourceFuse Case Study, 2025].

When designing test cases, it's important to include a mix of scenarios:

- Common queries that users frequently ask.

- Complex inquiries that challenge the chatbot's logic.

- Edge cases like misspellings, industry jargon, or unexpected inputs.

Since about 40% of users report negative experiences with chatbots due to poor handling of unexpected inputs, testing for these scenarios is critical. Test cases should also simulate complete user journeys, not just isolated interactions. This means verifying how the chatbot handles topic changes, maintains context across multiple exchanges, and recovers when conversations go off track.
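A journey-level test of this kind can be sketched as follows. `OrderBot` is a hypothetical stateful bot written only for this example; the point is the shape of the test, which sends an off-topic message mid-conversation and then checks that earlier context survived.

```python
from typing import List, Optional


class OrderBot:
    """Hypothetical stateful bot that remembers the last order number mentioned."""

    def __init__(self) -> None:
        self.order_id: Optional[str] = None

    def reply(self, message: str) -> str:
        for word in message.replace("?", "").split():
            if word.startswith("#"):
                self.order_id = word
                return f"Got it, looking at order {word}."
        if "status" in message.lower():
            if self.order_id:
                return f"Order {self.order_id} has shipped."
            return "Which order do you mean?"
        return "Sorry, could you rephrase that?"


def run_journey(bot: OrderBot, turns: List[str]) -> List[str]:
    """Send each turn in order and return the bot's replies."""
    return [bot.reply(turn) for turn in turns]


journey = [
    "I have a question about #1234",  # establishes context
    "What's the weather like?",       # off-topic detour
    "Anyway, what's the status?",     # relies on the earlier context
]
replies = run_journey(OrderBot(), journey)
for user, bot_reply in zip(journey, replies):
    print(f"user: {user}\nbot:  {bot_reply}")
```

Running the same final question against a fresh bot is a useful control: it should ask which order is meant, which confirms the test is really exercising memory.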

Performance testing is another key area. By evaluating response times under varying traffic conditions, you can ensure your chatbot remains responsive even during peak usage periods. This is especially important given that 68% of consumers value quick responses from chatbots.
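A rough way to measure response times under different traffic levels is to fire concurrent requests and look at the latency distribution. In this sketch `slow_bot` is a hypothetical stub with a simulated 10 ms delay; in practice it would be replaced by a real call to the chatbot endpoint.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles
from typing import Callable, Dict


def slow_bot(message: str) -> str:
    """Hypothetical bot stub with a simulated processing delay."""
    time.sleep(0.01)
    return "ok"


def timed_call(bot: Callable[[str], str], message: str) -> float:
    """Return the wall-clock latency of one request, in seconds."""
    start = time.perf_counter()
    bot(message)
    return time.perf_counter() - start


def load_test(bot: Callable[[str], str], concurrency: int, requests: int) -> Dict:
    """Send `requests` messages using `concurrency` worker threads."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda m: timed_call(bot, m), ["ping"] * requests))
    return {
        "concurrency": concurrency,
        "requests": requests,
        "mean_s": sum(latencies) / len(latencies),
        # quantiles(n=20) yields 19 cut points; the last is the 95th percentile.
        "p95_s": quantiles(latencies, n=20)[-1],
    }


results = [load_test(slow_bot, concurrency=c, requests=20) for c in (1, 5, 10)]
for row in results:
    print(row)
```

Reporting a high percentile alongside the mean matters because a handful of slow replies can hide behind a healthy average.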

Error handling is equally important. Testing how the chatbot deals with invalid or confusing inputs helps ensure it provides helpful feedback or transfers users to human support when necessary. Additionally, these scenarios should assess how the chatbot responds to frustrated or confused users, as this can significantly influence the overall user experience.
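The fallback and handoff behavior described above can be tested by feeding the bot a run of unusable inputs. `FallbackBot` and its two-miss handoff rule are hypothetical, chosen only to show the shape of such a test.

```python
from typing import List

HANDOFF_AFTER = 2  # assumed rule: hand off after two consecutive misses


class FallbackBot:
    """Hypothetical bot that escalates to a human after repeated misses."""

    def __init__(self) -> None:
        self.misses = 0

    def reply(self, message: str) -> str:
        if "order" in message.lower():
            self.misses = 0  # a recognized request resets the counter
            return "Sure, what is your order number?"
        self.misses += 1
        if self.misses >= HANDOFF_AFTER:
            return "Let me connect you to a human agent."
        return "Sorry, I didn't catch that. Could you rephrase?"


def replies_for(inputs: List[str]) -> List[str]:
    """Run the inputs through a fresh bot and return every reply."""
    bot = FallbackBot()
    return [bot.reply(text) for text in inputs]


confusing = replies_for(["", "asdf!!"])
recovered = replies_for(["???", "I need help with my order", "%%%"])
print(confusing)
print(recovered)
```

The second scenario checks recovery: a valid request between two bad inputs should reset the counter, so the user is not escalated prematurely.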

Cross-platform testing is essential to confirm consistent functionality across websites, mobile apps, and social media platforms. Each platform may introduce unique challenges, so specific test scenarios are necessary to validate performance.

Finally, the best test cases are based on real user interactions. Using anonymized real-world data provides authentic scenarios that reflect how people actually communicate, including informal language, abbreviations, and context shifts that synthetic data often overlooks. Regularly updating this data helps ensure your test cases stay relevant as user behavior and language evolve.

Conclusion

After exploring a range of tools and strategies, one thing is clear: effective testing is the backbone of high-performing chatbots. The tools we've discussed, from Botium's extensive capabilities to the OpenAssistantGPT SDK, provide powerful options for validating chatbot functionality without the hefty price tags of proprietary solutions.

Consider this: companies can cut QA costs by up to 93%, and the conversational AI market is expected to jump from $4.8 billion in 2020 to $13.9 billion by 2025. With chatbot usage up by over 92% since 2019, a well-thought-out testing strategy is no longer optional; it is essential to remain competitive.

Open-source tools, in particular, stand out for their flexibility. They give development teams full control over testing processes, enabling them to refine chatbot performance continuously. By offering access to the codebase and the ability to customize features, these tools empower teams to adapt and improve their bots over time.

Key Takeaways

Here’s a quick recap of the most important points:

- Define your testing needs early. Understand what functionalities you require and how you’ll use the tools. This initial planning saves time and ensures your solutions align with project goals.

- Explore Botium and OpenAssistantGPT SDK. Botium supports testing across 55+ conversational AI platforms, while the OpenAssistantGPT SDK is ideal for deployment testing.

- Evaluate community support and documentation. Active forums and resources like Discord channels are invaluable for troubleshooting and learning.

- Experiment with multiple tools. Testing different open-source options helps you identify the best fit for your specific needs. Many tools offer detailed documentation and examples to guide your evaluation process.

- Integrate testing into your CI/CD pipeline. Designing realistic test cases and automating testing workflows not only boosts performance but also builds user trust. Thorough testing leads to happier users and lower support costs.

FAQs

What should I consider when choosing an open-source tool for chatbot testing?

When you're choosing an open-source tool for chatbot testing, the first step is to pinpoint what you need. Look for features like automation capabilities, multi-platform support, and performance testing. Automation can be a game-changer, especially for tasks like regression testing. It helps you save time by simulating user interactions and handling repetitive test cases efficiently.

Make sure the tool works across multiple platforms to ensure your chatbot provides a consistent experience, no matter where it's used. This is key to meeting customer expectations and maintaining reliability. Also, take the time to set clear testing goals and map out a detailed plan that includes specific scenarios and expected results. A well-structured approach like this not only ensures thorough testing but also helps you create a chatbot that's more reliable and effective.

What’s the difference between functional testing and deployment testing in chatbot development?

Functional testing and deployment testing serve distinct purposes in the development of chatbots. Functional testing focuses on making sure the chatbot works as designed. This involves checking its responses to different inputs, verifying that all features function properly, and running regression tests to ensure updates don’t disrupt existing capabilities.

Meanwhile, deployment testing takes place after the chatbot is developed and is geared toward evaluating its performance in a live environment. This includes assessing how well it handles real user interactions, measuring response times, managing traffic loads, and collecting user feedback to confirm it aligns with user expectations.

How can I use open-source tools in my CI/CD pipeline to optimize chatbot testing and development?

Incorporating open-source tools into your CI/CD pipeline can simplify chatbot testing and development, leading to smoother deployments and greater reliability. Start by automating your testing processes with tools like Jenkins. Jenkins helps catch issues early by providing quick feedback on code changes, ensuring your chatbot performs as expected. Automated tests can compare chatbot responses to expected outputs, maintaining consistency as your chatbot evolves.

For added efficiency, tools like Selenium or Robot Framework can be valuable additions to your pipeline. These tools enable scalable and automated testing, making it easier to uphold quality as your chatbot grows. By embedding continuous quality assurance (QA) into your CI/CD workflow, you can identify and resolve potential problems before they impact production, saving both time and effort while reducing errors.